
Lipsync

VTube Studio can use your microphone to analyze your speech and calculate Live2D model mouth forms based on it.

You can choose between two lipsync types:

- Simple Lipsync
  - Legacy option, Windows-only, based on Oculus VR Lipsync.
  - NOT RECOMMENDED, use Advanced Lipsync instead.
- Advanced Lipsync
  - Based on uLipSync.
  - Fast and accurate. It can be calibrated to your own voice so it accurately detects the A, I, U, E and O phonemes.
  - Available on all platforms (desktop and smartphone).

Note: "Simple Lipsync" will not be discussed here. If you're still using it, please consider moving on to "Advanced Lipsync". It supports the same parameters and more, is more performant and works on all platforms.

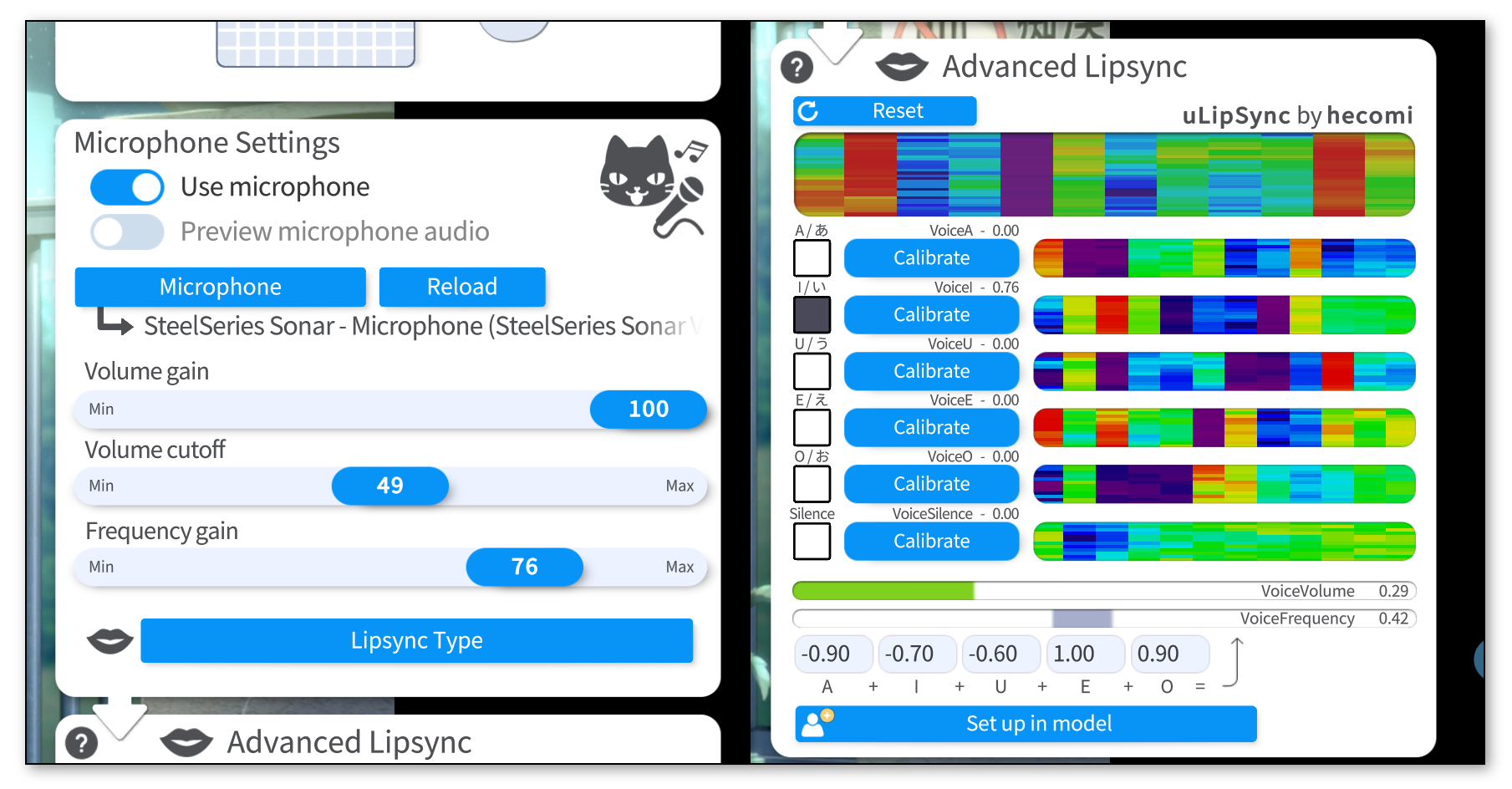

To use Advanced Lipsync, select it with the "Lipsync Type" button. Then select your microphone and make sure "Use microphone" is on.

There are three sliders on the main config card:

- Volume Gain: Boosts the microphone volume. Affects the `VoiceVolume` and `VoiceVolumePlusMouthOpen` parameters.
- Volume Cutoff: Noise gate. Eliminates low-volume noise. It's probably best to keep this low or at 0 and apply a noise gate before the audio is fed into VTube Studio instead.
- Frequency Gain: Boosts the value of `VoiceFrequency` and `VoiceFrequencyPlusMouthSmile`. Those parameters are explained below.
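To make the two volume sliders concrete, here is a minimal Python sketch of how a gain and a noise-gate cutoff could turn a block of microphone samples into a 0 to 1 volume value. This is not VTube Studio's implementation: the RMS level measure, applying gain as a plain multiplier, and the function names `rms` and `voice_volume` are all assumptions made for illustration.

```python
import math


def rms(samples: list[float]) -> float:
    """Root-mean-square level of one block of audio samples in [-1, 1]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def voice_volume(samples: list[float], gain: float = 1.0, cutoff: float = 0.0) -> float:
    """Hypothetical mapping of one microphone block to a 0..1 volume value.

    gain   stands in for the "Volume Gain" slider (assumed: plain multiplier).
    cutoff stands in for the "Volume Cutoff" slider (assumed: simple noise gate).
    """
    level = rms(samples) * gain
    if level < cutoff:      # noise gate: treat quiet input as silence
        return 0.0
    return min(level, 1.0)  # clamp to the parameter range 0..1


if __name__ == "__main__":
    print(voice_volume([0.5, -0.5, 0.5, -0.5]))   # 0.5
    print(voice_volume([0.5, -0.5], gain=3.0))    # 1.0 (clamped)
    print(voice_volume([0.5, -0.5], cutoff=0.6))  # 0.0 (gated)
```

The last call shows why a high cutoff can swallow quiet speech entirely, which is one reason to keep the cutoff low and gate the audio before it reaches VTube Studio.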

If your microphone lags behind, you can click the "Reload" button to restart the microphone. You can also set up a hotkey for that.

To calibrate the lipsync system, click each "Calibrate" button and keep saying the respective phoneme until the calibration is over. That way, the lipsync system will be calibrated to your voice. If you change your microphone or audio setup, you might want to redo the calibration.

Clicking "Reset" will reset the calibration to default values.

Check that the calibration is good by saying each phoneme again and confirming that the matching phoneme lights up in the UI.

The funny colorful visualizations shown next to the calibration buttons are related to the frequency spectrum recorded from your voice during calibration. If you'd like to learn more about the details, check the uLipSync repository.
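Conceptually, calibration stores what each phoneme "looks like" in your voice, and detection compares live audio against those stored profiles. The Python sketch below illustrates that idea under heavy simplification: each audio frame is assumed to already be a feature vector (uLipSync itself works with MFCC-based features), and the inverse-distance scoring is a stand-in chosen for clarity, not uLipSync's actual comparison method. `calibrate` and `detect` are hypothetical names.

```python
import math

Vector = list[float]


def calibrate(frames: list[Vector]) -> Vector:
    """Average the feature frames recorded while one phoneme is held."""
    n = len(frames)
    return [sum(frame[i] for frame in frames) / n for i in range(len(frames[0]))]


def detect(frame: Vector, profiles: dict[str, Vector]) -> dict[str, float]:
    """Score one live frame against every calibrated profile.

    Closer profiles get higher scores (inverse Euclidean distance);
    scores are normalized so they sum to 1.
    """
    eps = 1e-6  # avoids dividing by zero on an exact match
    raw = {name: 1.0 / (math.dist(frame, ref) + eps)
           for name, ref in profiles.items()}
    total = sum(raw.values())
    return {name: score / total for name, score in raw.items()}


if __name__ == "__main__":
    # Toy 2-D "features" standing in for real spectral features.
    profiles = {
        "A": calibrate([[1.0, 0.0], [0.8, 0.2]]),
        "I": calibrate([[0.0, 1.0], [0.2, 0.8]]),
    }
    scores = detect([0.85, 0.15], profiles)
    print(max(scores, key=scores.get))  # A
```

The sketch also hints at why recalibrating after a microphone change helps: a different microphone shifts the recorded features, so the stored profiles no longer sit close to what your voice now produces.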

The lipsync system outputs the following voice tracking parameters:

- `VoiceA` - Between 0 and 1
  - How strongly the `A` phoneme is detected.
- `VoiceI` - Between 0 and 1
  - How strongly the `I` phoneme is detected.
- `VoiceU` - Between 0 and 1
  - How strongly the `U` phoneme is detected.
- `VoiceE` - Between 0 and 1
  - How strongly the `E` phoneme is detected.
- `VoiceO` - Between 0 and 1
  - How strongly the `O` phoneme is detected.
- `VoiceSilence` - Between 0 and 1
  - 1 when "silence" is detected (based on your calibration) or when the volume is very low (near 0).
- `VoiceVolume`/`VoiceVolumePlusMouthOpen` - Between 0 and 1
  - How loud the detected microphone volume is.
  - Map this to your `ParamMouthOpen` Live2D parameter.
- `VoiceFrequency`/`VoiceFrequencyPlusMouthSmile` - Between 0 and 1
  - Calculated from the detected phonemes. You can configure how the phoneme detection values are multiplied to generate this parameter.
  - Map this to your `ParamMouthForm` Live2D parameter.
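The sketch below shows one plausible way `VoiceFrequency` and `VoiceSilence` could be derived from the values listed above. The weighted sum, the clamp to 0..1, the silence threshold of 0.01 and the example weights are all assumptions for illustration; VTube Studio's exact formula and defaults may differ.

```python
def voice_frequency(phonemes: dict[str, float],
                    weights: dict[str, float],
                    gain: float = 1.0) -> float:
    """Hypothetical VoiceFrequency: weighted phoneme sum times Frequency Gain.

    Missing phonemes count as 0; the result is clamped to 0..1.
    """
    value = sum(phonemes.get(p, 0.0) * w for p, w in weights.items()) * gain
    return max(0.0, min(value, 1.0))


def voice_silence(silence_score: float, volume: float,
                  threshold: float = 0.01) -> float:
    """Hypothetical VoiceSilence: forced to 1 when the volume is near 0,
    otherwise the calibration-based silence score (0..1)."""
    if volume < threshold:
        return 1.0
    return silence_score


# Hypothetical example weights: I and E push the value up the most.
WEIGHTS = {"A": 0.3, "I": 1.0, "U": 0.0, "E": 0.6, "O": 0.0}

if __name__ == "__main__":
    print(voice_frequency({"A": 1.0}, WEIGHTS))            # 0.3
    print(voice_frequency({"I": 1.0, "E": 1.0}, WEIGHTS))  # 1.0 (1.6 clamped)
    print(voice_silence(0.2, volume=0.0))                  # 1.0
```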

The `VoiceA`/`I`/`U`/`E`/`O` parameters should be mapped to blendshape Live2D parameters called `ParamA`, `ParamI`, `ParamU`, `ParamE` and `ParamO`, which deform the mouth into the respective shape.
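As a summary, the mappings described on this page can be written as a lookup table plus a linear remap from each input's 0..1 range into the target parameter's output range. The table contents follow this page; the `remap` and `apply_mapping` helpers, the default output range of 0..1, and the -1..1 range used for `ParamMouthForm` in the example are assumptions (check your model's actual parameter ranges in Live2D Cubism).

```python
# Input -> Live2D parameter table, following this page.
# VoiceVolumePlusMouthOpen / VoiceFrequencyPlusMouthSmile could be used instead.
LIPSYNC_MAPPING: dict[str, str] = {
    "VoiceVolume": "ParamMouthOpen",
    "VoiceFrequency": "ParamMouthForm",
    "VoiceA": "ParamA",
    "VoiceI": "ParamI",
    "VoiceU": "ParamU",
    "VoiceE": "ParamE",
    "VoiceO": "ParamO",
}


def remap(value: float, in_min: float, in_max: float,
          out_min: float, out_max: float) -> float:
    """Linearly map value from [in_min, in_max] to [out_min, out_max], clamped."""
    t = (value - in_min) / (in_max - in_min)
    t = max(0.0, min(t, 1.0))
    return out_min + t * (out_max - out_min)


def apply_mapping(inputs: dict[str, float],
                  out_ranges: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Turn lipsync inputs (0..1) into Live2D parameter values.

    Parameters missing from out_ranges are assumed to use 0..1.
    """
    params: dict[str, float] = {}
    for source, target in LIPSYNC_MAPPING.items():
        if source in inputs:
            lo, hi = out_ranges.get(target, (0.0, 1.0))
            params[target] = remap(inputs[source], 0.0, 1.0, lo, hi)
    return params


if __name__ == "__main__":
    params = apply_mapping({"VoiceVolume": 0.5, "VoiceFrequency": 0.5, "VoiceA": 1.0},
                           {"ParamMouthForm": (-1.0, 1.0)})
    print(params)  # {'ParamMouthOpen': 0.5, 'ParamMouthForm': 0.0, 'ParamA': 1.0}
```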

If you run into any questions that aren't answered in this manual, please ask on the VTube Studio Discord.
