-

Notifications

You must be signed in to change notification settings - Fork 294

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Update densenet.py #25

base: master

Are you sure you want to change the base?

Conversation

exclude the input with the feature maps in the concatenation after the last desnblock

|

could you explain this more clearly? |

|

Please refer to #23 |

|

Hmm, the number of parameters remains the same, but the problem of DenseNets is that even though number of parameter remains the same, the connections determine performance.. I have seen that the performance of this model improves severely with the correct implementation of the Dense block (as @ahundt and I will attest to for the performance boost on segmentation tasks). Unless I can execute the model with this change on the same dataset, and can observe noticeably improved performance, I don't want to merge this. @jrwin Can you train a FCN model with and without this change on the same dataset to see how much the performance changed? Also, @ahundt, is your dataset small enough to train within a few hours? Mine takes days, and I don't have access to the server I used before for the next 2 weeks, so I wont be able to run on my dataset. |

|

@titu1994 , we have different use case. I try to do the liver and lesion segmentation for CT slice. |

|

These small modifications can definitely make a huge difference in performance! Could you clarify your wording here, did you mean to say:

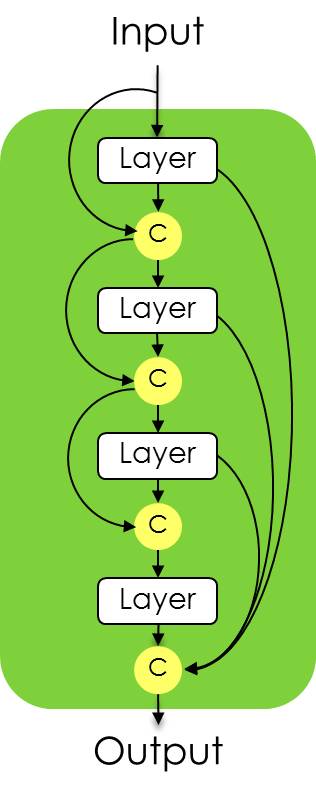

In other words, you're running with this patch applied, correct? Looking at the large graph @titu1994 printed out in #23 It looks like you're removing one input from concatenate_101. (Could you print the layer name on your change to confirm?) Here is a screenshot from the 100 layers paper: And here is the screenshot from the original densenet paper:

The correctness of the current implementation (without this pull request) proves to be the case even when comparing against the original author's published code, according to how I read it: Even with all of the above said, it is entirely conceivable that your change improves performance despite differing from what is implemented in the paper. :-) Your PR here is certainly worth additional testing, but we would need some hard numbers on a standard dataset, see https://github.com/aurora95/Keras-FCN perhaps for code to train on pascal voc, and even if it does in fact improve results applying this change would probably need to be an optional flag, clearly labeling the flag as a deliberate departure from the paper. This is so people can still use a network equivalent to the original paper's implementation. |

|

Ah wait now I'm a little mixed up, I have a meeting will look some more after |

|

@titu1994 I looked into this further and you made this exact change in keras contrib :-) keras-team/keras-contrib@fe02032 After further review, I believe this PR is correct. |

|

I would still like a performance test. As far as I remember, I reverted that commit cause it diminished performance on the private dataset I was using. |

@ahundt , it is right. But my densenetFCN is not the original one with 100 layers. For comparison, the following was the ploted model of 'x_up'. Lets call it Doutput: Base on my evaluation of the Liver segmentation on the LiTS dataset, the NDoutput get 0.955 Dice per case , and the Doutput get 0.935 Dice per case. I am still confused now, for the Doutput combines more info(features) than the NDoutput, but Doutput got no better results on my evaluation. |

exclude the input with the feature maps in the concatenation after the last desnblock