
About TensorSpace 🤔: TensorSpace Github

TensorSpace-Converter is a tool that generates a TensorSpace-compatible model from a pre-trained model built with TensorFlow, Keras or TensorFlow.js. It extracts information from hidden layers, matches intermediate data based on the configuration, and exports a preprocessed TensorSpace-compatible model. TensorSpace-Converter simplifies preprocessing and lets developers focus on model visualization.

- Motivation

- Getting Started

- Running with Docker

- Converter API

- Converter Usage Examples

- Development

- Contributors

- Contact

- License

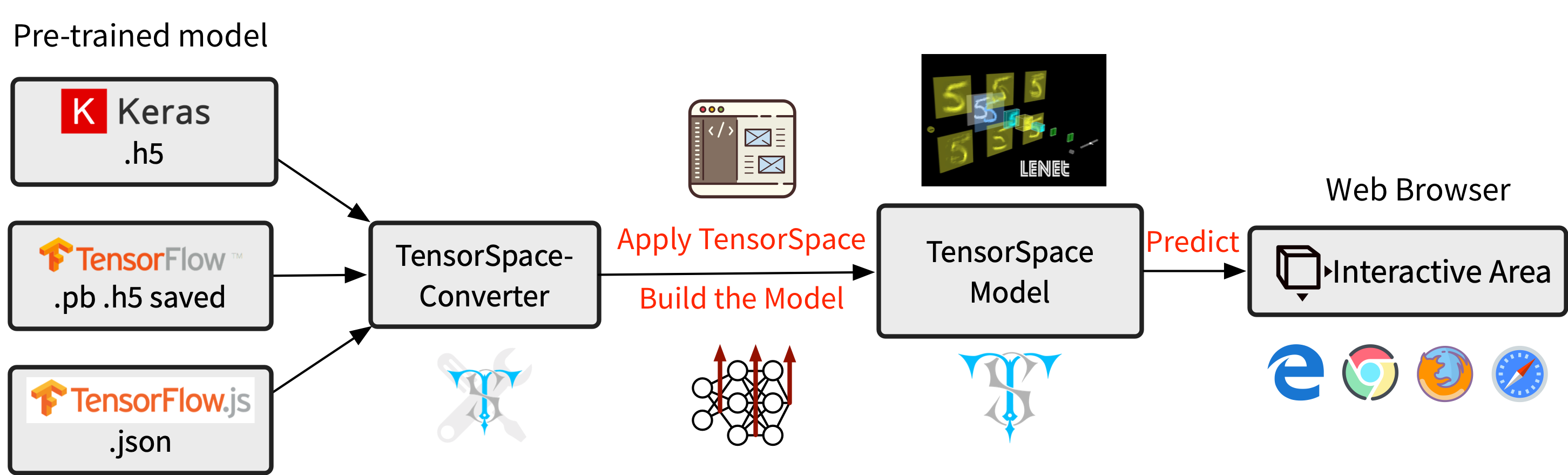

TensorSpace is a JavaScript framework for 3D visualization of deep learning models built with TensorFlow, Keras or TensorFlow.js. Before TensorSpace can be applied to a pre-trained model, the model has to go through an important step: TensorSpace model preprocessing (check out this article for more information about TensorSpace preprocessing). TensorSpace-Converter is designed to simplify model preprocessing and to generate a TensorSpace-compatible model easily and quickly.

Without TensorSpace-Converter, the developer needs to be an expert on both the pre-trained model and the machine learning library it was built with. For example, a developer with a LeNet pre-trained model built by tf.keras has to know the structure of the LeNet network as well as how to implement a new multi-output model in tf.keras. With TensorSpace-Converter, preprocessing takes only a few commands: in the same example, the developer only needs to run the converter commands for a tf.keras pre-trained model.

As a component of the TensorSpace ecosystem, TensorSpace-Converter simplifies TensorSpace preprocessing and relieves developers of learning how to generate a TensorSpace-compatible model. As a development tool, it helps separate the work of model training from the work of model visualization.

Fig. 1 - TensorSpace-Converter Usage

Install the tensorspacejs pip package:

$ pip install tensorspacejs

If tensorspacejs is installed successfully, you can check the TensorSpace-Converter version with the command:

$ tensorspacejs_converter -v

Then init TensorSpace-Converter (important step):

$ tensorspacejs_converter -init

- Note

TensorSpace-Converter must run under Python 3.6, Node 11.3+ and NPM 6.5+. If a different Python version is already installed in your local environment, we suggest creating a fresh virtual environment. For example, with conda:

$ conda create -n envname python=3.6

$ source activate envname

$ pip install tensorspacejs

The following part introduces the usage and workflow:

- How to use TensorSpace-Converter to convert a pre-trained model;

- How to apply TensorSpace to the converted model for model visualization.

An MNIST-digit tf.keras model is used as the example in the tutorial. The sample files used in the tutorial include a pre-trained tf.keras model, a TensorSpace-Converter script and TensorSpace visualization code.

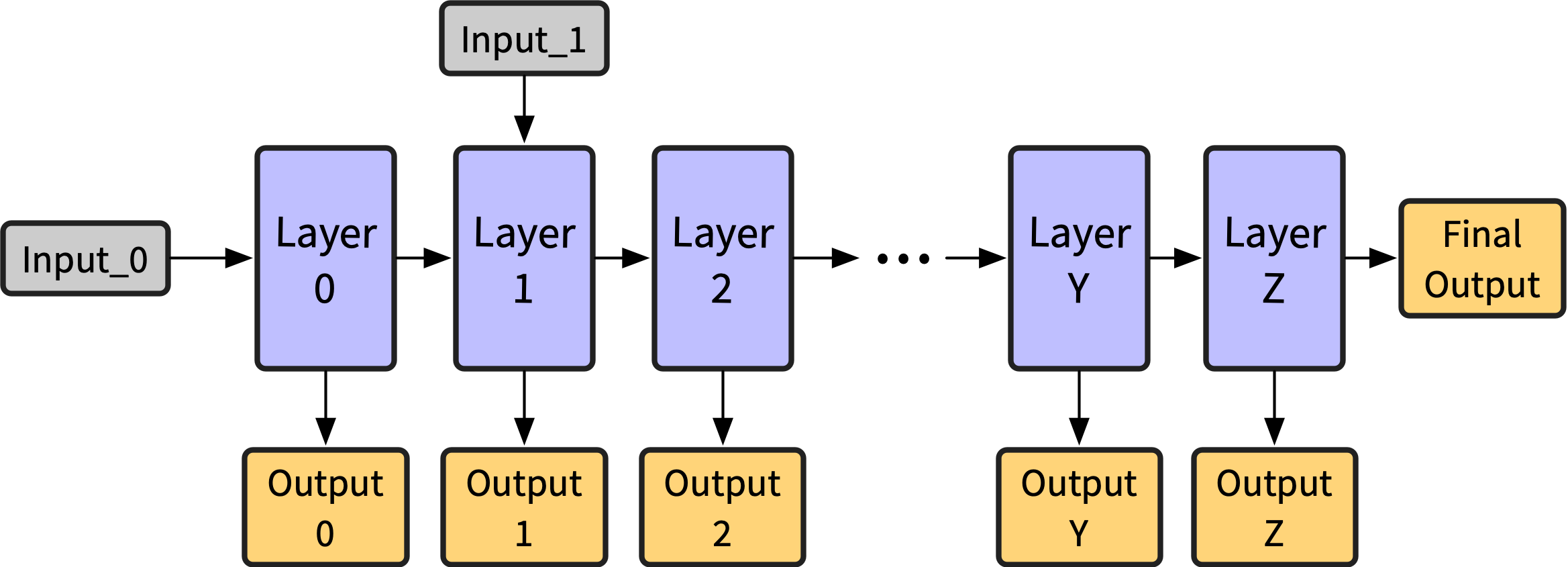

Fig. 2 - TensorSpace-Converter Workflow

TensorSpace-Converter converts an input model into a multi-output model. Check out this article for more information about multi-output models and model preprocessing.

$ tensorspacejs_converter \
    --input_model_from="tensorflow" \
    --input_model_format="tf_keras" \
    --output_layer_names="conv_1,maxpool_1,conv_2,maxpool_2,dense_1,dense_2,softmax" \
    ./PATH/TO/MODEL/tf_keras_model.h5 \
    ./PATH/TO/SAVE/DIR

Fig. 3 - converted multi-output model

model.load({
    type: "tensorflow",
    url: "/PATH/TO/MODEL/model.json"
});

Fig. 4 - LeNet Visualization

Is setting up the TensorSpace-Converter runtime environment tedious? Dockerize it!

Here is a TensorSpace-Converter Dockerfile that you can use to build an out-of-the-box TensorSpace-Converter image. We also provide easy-to-use scripts to init (init_docker_converter.sh) and run (run_docker_converter.sh) the tensorspacejs Docker image.

- To init the tensorspacejs Docker image (make sure the Docker daemon is running before you init the image):

cd ./docker

bash init_docker_converter.sh

- To run the Docker image

Put the TensorSpace-Converter script and model assets in a WORK_DIR, then execute run_docker_converter.sh to run the tensorspacejs image:

cd ./docker

bash run_docker_converter.sh --work_dir PATH/TO/WORK_DIR

Check out this Docker Example for more practical usage of running TensorSpace-Converter with Docker.
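For setups that run the converter directly on the host instead of in Docker, the version requirements from the installation note (Python 3.6, Node 11.3+, NPM 6.5+) can be checked up front. The following is a minimal POSIX shell sketch, not something shipped with TensorSpace-Converter: the helper names version_ge, extract_version and check_min are illustrative, and it assumes the interpreter is reachable as python.

```shell
#!/bin/sh
# Illustrative pre-flight check; not part of TensorSpace-Converter.

# Succeeds when dotted numeric version $1 is greater than or equal to $2.
version_ge() {
  va=$1 vb=$2
  while [ -n "$va" ] || [ -n "$vb" ]; do
    x=${va%%.*} y=${vb%%.*}
    # Drop the leading component that was just read from each version.
    if [ "$va" = "$x" ]; then va=; else va=${va#*.}; fi
    if [ "$vb" = "$y" ]; then vb=; else vb=${vb#*.}; fi
    x=${x:-0} y=${y:-0}
    if [ "$x" -gt "$y" ]; then return 0; fi
    if [ "$x" -lt "$y" ]; then return 1; fi
  done
  return 0
}

# Reads tool output on stdin and prints the first dotted number found,
# for example "v11.3.0" becomes "11.3.0".
extract_version() {
  sed -n 's/[^0-9]*\([0-9][0-9.]*\).*/\1/p' | head -n 1
}

# check_min NAME MINIMUM COMMAND [ARGS...]: reports whether COMMAND meets MINIMUM.
check_min() {
  name=$1 min=$2
  shift 2
  if ! command -v "$1" >/dev/null 2>&1; then
    echo "$name: not found"
    return 0
  fi
  found=$("$@" 2>/dev/null | extract_version)
  if version_ge "${found:-0}" "$min"; then
    echo "$name $found: ok (needs >= $min)"
  else
    echo "$name $found: too old (needs >= $min)"
  fi
}

# Python is pinned to the 3.6 series in this document, so it is matched by prefix.
if command -v python >/dev/null 2>&1; then
  py=$(python --version 2>&1 | extract_version)
  case "$py" in
    3.6|3.6.*) echo "python $py: ok" ;;
    *) echo "python $py: this document asks for 3.6" ;;
  esac
else
  echo "python: not found"
fi
check_min node 11.3 node -v
check_min npm 6.5 npm -v
```

The script only reports and never exits with an error, so it can be run before deciding between a local environment and the Docker image.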

Sample TensorSpace-Converter script:

$ tensorspacejs_converter \
    --input_model_from="XXX" \
    --input_model_format="YYY" \
    --output_layer_names="EEE1,EEE2,EEE3" \
    input_path \
    output_path

Arguments explanation:

| Positional Arguments | Description |
|---|---|
| input_path | Path for model input artifacts. Check out Usage Example for how to set this attribute for different kinds of models. |
| output_path | Folder for all output artifacts. |

| Options | Description |
|---|---|
| --input_model_from | The training library of the pre-trained model. Use: tensorflow for TensorFlow, keras for Keras, tfjs for TensorFlow.js. |
| --input_model_format | The format of the input model. Check out Usage Example for how to set this attribute for different kinds of models. |
| --output_layer_names | The names of the layers which will be visualized in TensorSpace, separated by commas ",". |
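The arguments above can be wrapped in a small shell function that validates them before calling the converter. This is a sketch under stated assumptions: convert_model and the CONVERTER_BIN variable are hypothetical names introduced here for illustration (CONVERTER_BIN only exists so that the command can be dry-run with echo), and only the flags documented in the tables are used.

```shell
#!/bin/sh
# Hypothetical wrapper around tensorspacejs_converter; not part of the tool itself.
# Usage: convert_model FROM FORMAT LAYERS INPUT_PATH OUTPUT_PATH
convert_model() {
  from=$1 format=$2 layers=$3 input_path=$4 output_path=$5

  # --input_model_from accepts the three values listed in the options table.
  case "$from" in
    tensorflow|keras|tfjs) ;;
    *) echo "unsupported --input_model_from: $from" >&2; return 2 ;;
  esac

  if [ -z "$layers" ] || [ -z "$input_path" ] || [ -z "$output_path" ]; then
    echo "usage: convert_model FROM FORMAT LAYERS INPUT_PATH OUTPUT_PATH" >&2
    return 2
  fi

  # Rebuild the argument list in the order used by the sample script.
  set -- "--input_model_from=$from"
  # The TensorFlow.js example in this document passes no --input_model_format,
  # so an empty FORMAT is allowed and the flag is then left out.
  if [ -n "$format" ]; then
    set -- "$@" "--input_model_format=$format"
  fi
  set -- "$@" "--output_layer_names=$layers" "$input_path" "$output_path"

  # CONVERTER_BIN is an illustrative override for dry runs.
  "${CONVERTER_BIN:-tensorspacejs_converter}" "$@"
}

# Dry run in a subshell: print the arguments instead of running the converter.
(CONVERTER_BIN=echo; convert_model keras topology_weights_combined \
  "layer1Name,layer2Name,layer3Name" ./PATH/TO/MODEL/xxx.h5 ./PATH/TO/SAVE/DIR)
```

Dropping the CONVERTER_BIN override makes the same call invoke the real converter, provided tensorspacejs is installed and initialized.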

This section introduces the usage of TensorSpace-Converter for different types of pre-trained models from TensorFlow, Keras and TensorFlow.js.

A pre-trained model built by TensorFlow can be saved as a saved model, a frozen model, a combined HDF5 model or a separated HDF5 model. Use the TensorSpace-Converter command that matches the TensorFlow model format. TensorSpace-Converter collects data from the tensors, then uses those outputs as the inputs of the layers in the TensorSpace visualization. The developer collects all necessary tensor names and passes the list as output_layer_names.

For a combined HDF5 model, topology and weights are saved in a single HDF5 file xxx.h5. Set input_model_format to tf_keras. A sample command looks like:

$ tensorspacejs_converter \
    --input_model_from="tensorflow" \
    --input_model_format="tf_keras" \
    --output_layer_names="layer1Name,layer2Name,layer3Name" \
    ./PATH/TO/MODEL/xxx.h5 \
    ./PATH/TO/SAVE/DIR

For a separated HDF5 model, topology and weights are saved in separate files: a topology file xxx.json and a weights file xxx.h5. Set input_model_format to tf_keras_separated. In this case the model has two input files: join the two file paths with a comma (.json first, .h5 last) and pass the combined path as the positional argument input_path. A sample command looks like:

$ tensorspacejs_converter \
    --input_model_from="tensorflow" \
    --input_model_format="tf_keras_separated" \
    --output_layer_names="layer1Name,layer2Name,layer3Name" \
    ./PATH/TO/MODEL/xxx.json,./PATH/TO/MODEL/eee.h5 \
    ./PATH/TO/SAVE/DIR

For a TensorFlow saved model, set input_model_format to tf_saved. A sample command looks like:

$ tensorspacejs_converter \
    --input_model_from="tensorflow" \
    --input_model_format="tf_saved" \
    --output_layer_names="layer1Name,layer2Name,layer3Name" \
    ./PATH/TO/SAVED/MODEL/FOLDER \
    ./PATH/TO/SAVE/DIR

For a TensorFlow frozen model, set input_model_format to tf_frozen. A sample command looks like:

$ tensorspacejs_converter \
    --input_model_from="tensorflow" \
    --input_model_format="tf_frozen" \
    --output_layer_names="layer1Name,layer2Name,layer3Name" \
    ./PATH/TO/MODEL/xxx.pb \
    ./PATH/TO/SAVE/DIR

Check out this TensorFlow Tutorial for more practical usage of TensorSpace-Converter for TensorFlow models.
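The four TensorFlow cases in this section differ only in the shape of input_path and the matching input_model_format value. As a rough aid, the mapping can be sketched as a shell function. The function name tf_input_format is hypothetical, and the rules are a heuristic based purely on the file extensions and paths used in the sample commands, not logic taken from the converter.

```shell
#!/bin/sh
# Heuristic sketch: guess the --input_model_format value for a TensorFlow input_path.
# Based only on the examples in this section; the converter itself does no such guessing.
tf_input_format() {
  case "$1" in
    # Two comma-joined files, .json first and .h5 last: separated HDF5 model.
    *.json,*.h5) echo tf_keras_separated ;;
    # A single HDF5 file: combined HDF5 model.
    *.h5)        echo tf_keras ;;
    # A single .pb file: frozen model.
    *.pb)        echo tf_frozen ;;
    *)
      # A folder is treated as a saved model directory.
      if [ -d "$1" ]; then
        echo tf_saved
      else
        echo "cannot infer input_model_format for: $1" >&2
        return 1
      fi
      ;;
  esac
}

tf_input_format ./PATH/TO/MODEL/xxx.pb   # prints tf_frozen
```

The separated pattern is tested before the single .h5 pattern because a comma-joined path also ends in .h5.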

A pre-trained model built by Keras may come in two formats: topology and weights saved in a single HDF5 file, or topology and weights saved in separate files. Use the TensorSpace-Converter command that matches the saved Keras model.

For a Keras model whose topology and weights are saved in a single HDF5 file, i.e. xxx.h5, set input_model_format to topology_weights_combined. A sample command looks like:

$ tensorspacejs_converter \
    --input_model_from="keras" \
    --input_model_format="topology_weights_combined" \
    --output_layer_names="layer1Name,layer2Name,layer3Name" \
    ./PATH/TO/MODEL/xxx.h5 \
    ./PATH/TO/SAVE/DIR

For a Keras model whose topology and weights are saved in separate files, i.e. a topology file xxx.json and a weights file xxx.h5, set input_model_format to topology_weights_separated. In this case the model has two input files: join the two file paths with a comma (.json first, .h5 last) and pass the combined path as the positional argument input_path. A sample command looks like:

$ tensorspacejs_converter \
    --input_model_from="keras" \
    --input_model_format="topology_weights_separated" \
    --output_layer_names="layer1Name,layer2Name,layer3Name" \
    ./PATH/TO/MODEL/xxx.json,./PATH/TO/MODEL/eee.h5 \
    ./PATH/TO/SAVE/DIR

Check out this Keras Tutorial for more practical usage of TensorSpace-Converter for Keras models.
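For the separated formats (topology_weights_separated here, tf_keras_separated for TensorFlow), the order of the two comma-joined paths matters: .json first, .h5 last. The helper below is a small sketch that builds the combined input_path regardless of the order in which the two files are given. The name separated_input_path is hypothetical and not part of TensorSpace-Converter.

```shell
#!/bin/sh
# Sketch: build the comma-joined input_path for a separated model.
# Accepts the topology (.json) and weights (.h5) files in either order.
separated_input_path() {
  topology= weights=
  for f in "$@"; do
    case "$f" in
      *.json) topology=$f ;;
      *.h5)   weights=$f ;;
    esac
  done
  if [ -z "$topology" ] || [ -z "$weights" ]; then
    echo "expected one .json topology file and one .h5 weights file" >&2
    return 1
  fi
  # The sample commands put the .json file first and the .h5 file last.
  printf '%s,%s\n' "$topology" "$weights"
}

separated_input_path ./PATH/TO/MODEL/eee.h5 ./PATH/TO/MODEL/xxx.json
# prints ./PATH/TO/MODEL/xxx.json,./PATH/TO/MODEL/eee.h5
```

The printed value can be passed directly as the input_path positional argument of the sample command.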

A pre-trained model built by TensorFlow.js may consist of a topology file xxx.json and a weights file xxx.weight.bin. To convert the model with TensorSpace-Converter, put the two files in the same folder and set input_path to the topology file's path. A sample command looks like:

$ tensorspacejs_converter \
    --input_model_from="tfjs" \
    --output_layer_names="layer1Name,layer2Name,layer3Name" \
    ./PATH/TO/MODEL/xxx.json \
    ./PATH/TO/SAVE/DIR

Check out this TensorFlow.js tutorial for more practical usage of TensorSpace-Converter for TensorFlow.js models.
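Because the TensorFlow.js weights file has to sit in the same folder as the topology file, a quick check before conversion can catch a misplaced file. The following is a minimal sketch: the function name tfjs_weights_present is hypothetical, and it assumes the weights files end in .bin, as in the xxx.weight.bin example above.

```shell
#!/bin/sh
# Sketch: succeed when the topology file exists and at least one .bin weights
# file sits in the same folder. Assumes weights use the .bin extension.
tfjs_weights_present() {
  [ -f "$1" ] || return 1
  dir=$(dirname "$1")
  for f in "$dir"/*.bin; do
    # When nothing matches, the pattern stays literal and this test fails.
    if [ -e "$f" ]; then
      return 0
    fi
  done
  return 1
}

if tfjs_weights_present ./PATH/TO/MODEL/xxx.json; then
  echo "topology and weights found in the same folder"
else
  echo "topology file or .bin weights missing in that folder"
fi
```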

- Make sure you have a fresh python=3.6, node>=11.3, npm>=6.5, tensorflowjs=0.8.0 environment.

- To set up a TensorSpace-Converter development environment:

git clone https://github.com/tensorspace-team/tensorspace-converter.git

cd tensorspace-converter

bash init-converter-dev.sh

npm install

- To build the TensorSpace-Converter pip package (build files can be found in the dist folder):

bash build-pip-package.sh

- To install local build files:

pip install dist/tensorspacejs-VERSION-py3-none-any.whl

tensorspacejs_converter -v

- Grant permissions to the test scripts before running them:

bash ./test/grandPermission.sh

- To run the end-to-end tests (tests must be run in the pre-set environment):

npm run test

Thanks goes to these wonderful people (emoji key):

| Chenhua Zhu 💻 🎨 📖 💡 | syt123450 💻 🎨 📖 💡 | Qi(Nora) 🎨 | BoTime 💻 💡 | YaoXing Liu 📖 🎨 |
|---|---|---|---|---|

If you have any issues or questions, feel free to contact us by:

- Email: [email protected]

- GitHub Issues: create issue

- Slack: #questions

- Gitter: #Lobby