We are excited to share a guide for a Kotlin Library that brings the benefits of Llama Stack to your Android device. This library is a set of SDKs that provides a simple and effective way to integrate AI capabilities into your Android app, for both local (on-device) and remote inference.

Features:

- Local Inferencing: Run Llama models purely on-device with real-time processing. We currently utilize ExecuTorch as the local inference distributor and may support others in the future.

- ExecuTorch is a complete end-to-end solution within the PyTorch framework for inferencing capabilities on-device with high portability and seamless performance.

- Remote Inferencing: Perform inferencing tasks remotely with Llama models hosted on a remote server (or on a server running on localhost).

- Simple Integration: With easy-to-use APIs, a developer can quickly integrate Llama Stack into their Android app. The code differences between local and remote inferencing are also minimal.

Latest Release Notes: v0.2.2

Note: The current recommended version is 0.2.2 Llama Stack server with 0.2.2 Kotlin client SDK.

Tagged releases are stable versions of the project. While we strive to maintain a stable main branch, it's not guaranteed to be free of bugs or issues.

Check out our demo app to see how to integrate Llama Stack into your Android app: Android Demo App

The key files in the app are ExampleLlamaStackLocalInference.kt, ExampleLlamaStackRemoteInference.kts, and MainActivity.java. Together they contain the app's business logic and show how to use Llama Stack in both environments.

Add the following dependency in your build.gradle.kts file:

```kotlin
dependencies {
    implementation("com.llama.llamastack:llama-stack-client-kotlin:0.2.2")
}
```

This will download the jar files into your Gradle cache, in a directory like `~/.gradle/caches/modules-2/files-2.1/com.llama.llamastack/`.

If you plan on doing remote inferencing, this is sufficient to get started.

For local inferencing, you must also include the ExecuTorch library in your app.

Include the ExecuTorch library by:

- Download the

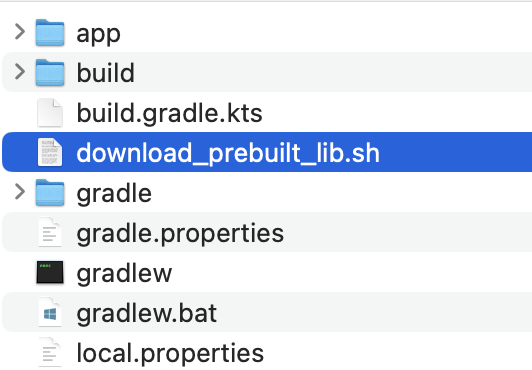

download-prebuilt-et-lib.shscript file from the llama-stack-client-kotlin-client-local directory to your local machine. - Move the script to the top level of your Android app where the app directory resides:

- Run

sh download-prebuilt-et-lib.shto create anapp/libsdirectory and download theexecutorch.aarin that path. This generates an ExecuTorch library for the XNNPACK delegate with commit: 0a12e33. - Add the

executorch.aardependency in yourbuild.gradle.ktsfile:

dependencies {

...

implementation(files("libs/executorch.aar"))

...

}

See the Android app README for the other dependencies needed for local RAG.

This section breaks down the demo app and shows the core pieces used to initialize and run inference with Llama Stack using the Kotlin library.

Start a Llama Stack server on localhost. Here is an example of how you can do this using the fireworks.ai distribution:

```shell
conda create -n stack-fireworks python=3.10
conda activate stack-fireworks
pip install --no-cache llama-stack==0.2.2
llama stack build --template fireworks --image-type conda
export FIREWORKS_API_KEY=<SOME_KEY>
llama stack run fireworks --port 5050
```

Ensure the Llama Stack server version is the same as the Kotlin SDK Library for maximum compatibility.

Other inference providers: Table

How to set remote localhost in Demo App: Settings

A client serves as the primary interface for interacting with a specific inference type and its associated parameters. You can configure and start inference only after the client has been initialized.

| Local Inference | Remote Inference |

|---|---|

|

|

Llama Stack agent is capable of running multi-turn inference using both customized and built-in tools.

Create the agent configuration:

```kotlin
val agentConfig =
    AgentConfig.builder()
        .enableSessionPersistence(false)
        .instructions("You're a helpful assistant")
        .maxInferIters(100)
        .model("meta-llama/Llama-3.1-8B-Instruct")
        .samplingParams(
            SamplingParams.builder()
                .strategy(
                    SamplingParams.Strategy.ofGreedySampling()
                )
                .build()
        )
        .toolChoice(AgentConfig.ToolChoice.AUTO)
        .toolPromptFormat(AgentConfig.ToolPromptFormat.JSON)
        .clientTools(
            listOf(
                CustomTools.getCreateCalendarEventTool() // Custom local tools
            )
        )
        .build()
```

Create the agent:

```kotlin
val agentService = client!!.agents()
val agentCreateResponse = agentService.create(
    AgentCreateParams.builder()
        .agentConfig(agentConfig)
        .build(),
)
val agentId = agentCreateResponse.agentId()
```

Create the session:

```kotlin
val sessionService = agentService.session()
val agentSessionCreateResponse = sessionService.create(
    AgentSessionCreateParams.builder()
        .agentId(agentId)
        .sessionName("test-session")
        .build()
)
val sessionId = agentSessionCreateResponse.sessionId()
```

Create a turn:

```kotlin
val turnService = agentService.turn()
val agentTurnCreateResponseStream = turnService.createStreaming(
    AgentTurnCreateParams.builder()
        .agentId(agentId)
        .messages(
            listOf(
                AgentTurnCreateParams.Message.ofUser(
                    UserMessage.builder()
                        .content(InterleavedContent.ofString("What is the capital of France?"))
                        .build()
                )
            )
        ) // closes .messages(...)
        .sessionId(sessionId)
        .build()
)
```

Handle the stream chunk callback:

```kotlin
agentTurnCreateResponseStream.use {
    agentTurnCreateResponseStream.asSequence().forEach {
        val agentResponsePayload = it.responseStreamChunk()?.event()?.payload()
        if (agentResponsePayload != null) {
            when {
                agentResponsePayload.isAgentTurnResponseTurnStart() -> {
                    // Handle Turn Start Payload
                }
                agentResponsePayload.isAgentTurnResponseStepStart() -> {
                    // Handle Step Start Payload
                }
                agentResponsePayload.isAgentTurnResponseStepProgress() -> {
                    // Handle Step Progress Payload
                }
                agentResponsePayload.isAgentTurnResponseStepComplete() -> {
                    // Handle Step Complete Payload
                }
                agentResponsePayload.isAgentTurnResponseTurnComplete() -> {
                    // Handle Turn Complete Payload
                }
            }
        }
    }
}
```
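The handler above leaves each branch empty. The following self-contained sketch shows one way to fill them in: it collects the streamed text of a turn into a single reply. It does not use the SDK; `TurnEvent` is a hypothetical sealed class that stands in for the five payload kinds checked above, and `collectReply` is also hypothetical. For tool calls with Llama 3.2 1B/3B, rely on the Step Complete payload rather than the deltas, because of the known issue listed at the end of this guide.

```kotlin
// Hypothetical stand-ins for the SDK payload kinds checked in the handler
// (isAgentTurnResponseTurnStart(), ...StepProgress(), and so on).
sealed class TurnEvent {
    object TurnStart : TurnEvent()
    object StepStart : TurnEvent()
    data class StepProgress(val textDelta: String) : TurnEvent()
    object StepComplete : TurnEvent()
    object TurnComplete : TurnEvent()
}

// Accumulates the streamed text deltas of one turn into the full reply.
fun collectReply(events: Sequence<TurnEvent>): String {
    val reply = StringBuilder()
    for (event in events) {
        when (event) {
            TurnEvent.TurnStart -> reply.setLength(0)                   // new turn: clear the buffer
            TurnEvent.StepStart -> Unit                                 // e.g. show a progress indicator
            is TurnEvent.StepProgress -> reply.append(event.textDelta) // partial text arrives
            TurnEvent.StepComplete -> Unit                              // a step (inference or tool call) finished
            TurnEvent.TurnComplete -> return reply.toString()          // turn is done
        }
    }
    return reply.toString() // stream ended without an explicit TurnComplete
}
```

In an app, each `StepProgress` delta would typically also be appended to the visible chat bubble as it arrives, so the user sees the answer stream in.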

Local agents are created and used in the same way as remote agents; however, local agents currently support a smaller set of verified capabilities:

- Inferencing with turns

- Custom tool calling, such as createCalendarEvent (see demo app)

- Local RAG

We do plan on building out more capabilities.

The beauty of Llama Stack is that the code for local agents is almost identical to the code for remote agents: the only additions are a few lines in the agent configuration, noted below.

Create the agent configuration:

```kotlin
val agentConfig =
    AgentConfig.builder()
        .enableSessionPersistence(false)
        .instructions(instruction)
        .maxInferIters(100)
        .model(modelName)
        .toolChoice(AgentConfig.ToolChoice.AUTO)
        .toolPromptFormat(toolPromptFormat)
        .clientTools(
            clientTools
        )
        .putAdditionalProperty("modelPath", JsonValue.from(modelPath))
        .putAdditionalProperty("tokenizerPath", JsonValue.from(tokenizerPath))
        .build()
```

Create the agent: Same as remote

Create the session: Same as remote

Create a turn: Same as remote

Handle the stream chunk callback: Same as remote

More examples can be found in our demo app
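The local configuration differs from the remote one only by the `modelPath` and `tokenizerPath` properties, which must point at files already on the device. The sketch below is a hypothetical pre-flight check, `requireLocalModelFiles`, that fails fast with a readable message before these paths are passed to the agent configuration. It is not part of the SDK. It only uses `java.io.File`.

```kotlin
import java.io.File

// Hypothetical helper (not part of the SDK): verifies that the paths given as
// "modelPath" and "tokenizerPath" point to readable files before the agent is created.
fun requireLocalModelFiles(modelPath: String, tokenizerPath: String) {
    for ((label, path) in listOf("modelPath" to modelPath, "tokenizerPath" to tokenizerPath)) {
        val file = File(path)
        require(file.isFile && file.canRead()) { "$label does not point to a readable file: $path" }
    }
}
```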

RAG is a technique that leverages the capabilities of LLMs by augmenting their knowledge with a particular document or source that is often local or private. This enables LLMs to reason about topics that are beyond their training data. This is beneficial for users, since they can use this technique to extract information from a large document, or ask questions about it, without reading it in full. The steps for implementing RAG are the following:

- Break the document into chunks: We break the document into more manageable pieces, both for efficient embedding generation (next step) and to improve contextual understanding by the LLM. There are several strategies, such as fixed-size, sentence-level, paragraph-level, sliding window, and many others. In Llama Stack, we use the sliding window strategy because it generates overlapping chunks.

- Generate embeddings: Convert each of the chunks into numerical representations (embeddings). For example, "The quick brown fox jumps over the lazy dog" could be converted to something like [0.23, -0.14, 0.67, -0.32, 0.11, ... , 0.45] .

- Store in a vector database: Store these embedding chunks in a vector DB so that a fast similarity search can be performed when the user provides a prompt.
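The sliding-window chunking from the first step can be sketched as follows. This is an illustration of the strategy, not Llama Stack's implementation. It splits on whitespace and counts words as a simple approximation of tokens (the local API names its size parameter `chunkSizeInWords`, while the remote one uses `chunkSizeInTokens`). The function name `slidingWindowChunks` and the `overlap` parameter are hypothetical.

```kotlin
// Splits text into overlapping word windows: each chunk holds up to `chunkSize`
// words and starts `chunkSize - overlap` words after the previous chunk.
fun slidingWindowChunks(text: String, chunkSize: Int, overlap: Int): List<String> {
    require(chunkSize > 0) { "chunkSize must be positive" }
    require(overlap in 0 until chunkSize) { "overlap must be in [0, chunkSize)" }
    val words = text.split(Regex("\\s+")).filter { it.isNotEmpty() }
    if (words.isEmpty()) return emptyList()
    val step = chunkSize - overlap
    val chunks = mutableListOf<String>()
    var start = 0
    while (start < words.size) {
        val end = minOf(start + chunkSize, words.size)
        chunks.add(words.subList(start, end).joinToString(" "))
        if (end == words.size) break // last window reached the end of the text
        start += step
    }
    return chunks
}
```

With `chunkSize = 3` and `overlap = 1`, the text "a b c d e f g" yields "a b c", "c d e", and "e f g". Each boundary word appears in two chunks, so a sentence cut at a boundary still has some surrounding context in one of them.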

After this, when the framework receives a user prompt, the prompt is converted into an embedding and a similarity search is run against the vector DB. The most similar (neighboring) chunks are then added to the system prompt for the LLM, which can now generate a relevant response based on the chunks from the document.
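The retrieval step can be sketched as a brute-force nearest-neighbor search using cosine similarity. This is a simplified illustration: a vector database such as ObjectBox, or the server-side provider, may use a different distance metric and an approximate index instead of scanning every chunk. The function names are hypothetical. `k` plays the same role as the `maxNeighborCount` argument used later in the local RAG example.

```kotlin
import kotlin.math.sqrt

// Cosine similarity between two embedding vectors of equal dimension.
fun cosineSimilarity(a: FloatArray, b: FloatArray): Float {
    require(a.size == b.size) { "Embedding dimensions differ: ${a.size} vs ${b.size}" }
    var dot = 0f
    var normA = 0f
    var normB = 0f
    for (i in a.indices) {
        dot += a[i] * b[i]
        normA += a[i] * a[i]
        normB += b[i] * b[i]
    }
    if (normA == 0f || normB == 0f) return 0f // a zero vector has no direction
    return dot / (sqrt(normA) * sqrt(normB))
}

// Returns the indices of the k chunk embeddings most similar to the prompt
// embedding, best match first.
fun topKNeighbors(prompt: FloatArray, chunkEmbeddings: List<FloatArray>, k: Int): List<Int> =
    chunkEmbeddings.indices
        .sortedByDescending { cosineSimilarity(prompt, chunkEmbeddings[it]) }
        .take(k)
```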

For the remote module, we expect the embedding generation to be done on the server side. The server has the flexibility to use any sentence embedding model and framework, and the SDK supports passing the embedded vectors and storing them in a vector DB. The RAG tool generates the embeddings and stores them in the vector DB; it is used with the following steps and parameters:

Create documents:

```kotlin
val documents = listOf(
    Document.builder()
        .documentId("num$i")
        .content(dataUri) // Set the content once, e.g. with uri or dataUri
        .mimeType("text/plain")
        .metadata(metadata)
        .build()
)
```

Register a vector database:

```kotlin
import java.util.UUID

val vectorDbId = "test-vector-db-${UUID.randomUUID().toString().replace("-", "")}"
client.vectorDbs().register(
    VectorDbRegisterParams.builder()
        .providerId(providerId)
        .vectorDbId(vectorDbId)
        .embeddingModel("all-MiniLM-L6-v2")
        .embeddingDimension(384)
        .build()
)
```

Insert the documents into the vector database:

```kotlin
client.toolRuntime().ragTool().insert(
    ToolRuntimeRagToolInsertParams.builder()
        .documents(documents)
        .vectorDbId(vectorDbId)
        .chunkSizeInTokens(512)
        .build()
)
```

For the local module, we expect the embedding generation to be done on the Android app side. The Android developer has the flexibility to use any sentence embedding model+framework they'd like and the SDK will support the passing of embedded vectors and storing them in a Vector DB.

- On-device Vector DB: ObjectBox is a vector database designed specifically for edge use cases. The DB lives entirely on-device and is optimized for similarity search, which is exactly what RAG needs. This popular solution is what the local module uses today.

Create vectorDB instance:

```kotlin
val vectorDbId = UUID.randomUUID().toString()
client!!.vectorDbs().register(
    VectorDbRegisterParams.builder()
        .vectorDbId(vectorDbId)
        .embeddingModel("not_required")
        .build()
)
```

Create chunks (a single document is supported):

```kotlin
val document = Document.builder()
    .documentId("1")
    .content(text) // text is a string with the entire contents of the document, prepared by the Android app
    .metadata(Document.Metadata.builder().build())
    .build()

val ragToolParams = ToolRuntimeRagToolInsertParams.builder()
    .vectorDbId(vectorDbId)
    .chunkSizeInTokens(chunkSizeInWords)
    .documents(listOf(document))
    .build()

val ragtool = client!!.toolRuntime().ragTool() as RagToolServiceLocalImpl
val chunks = ragtool.createChunks(ragToolParams)
```

Generate embeddings for chunks: Done in Android App

Store embedding chunks in Vector DB:

```kotlin
ragtool.insert(vectorDbId, embeddings, chunks)
```

Generate embeddings for user prompt: Done in Android App

Add the RAG tool group to turnParams so that the agent calls the RAG tool (see in-line comments for more information):

```kotlin
turnParams.addToolgroup(
    AgentTurnCreateParams.Toolgroup.ofAgentToolGroupWithArgs(
        AgentTurnCreateParams.Toolgroup.AgentToolGroupWithArgs.builder()
            .name("builtin::rag/knowledge_search") // Tool name
            .args(
                AgentTurnCreateParams.Toolgroup.AgentToolGroupWithArgs.Args.builder()
                    .putAdditionalProperty("vector_db_id", JsonValue.from(vectorDbId))
                    .putAdditionalProperty("ragUserPromptEmbedded", JsonValue.from(ragUserPromptEmbedded)) // Embedded user prompt
                    .putAdditionalProperty("maxNeighborCount", JsonValue.from(3)) // Number of similar neighbors to retrieve from the vector DB
                    .putAdditionalProperty("ragInstruction", JsonValue.from(localRagSystemPrompt())) // RAG system prompt provided by the Android app
                    .build()
            )
            .build()
    )
)

// Now create a turn and handle the response as in the Agent section
```

Note: The RAG system prompt must contain a `_RETRIEVED_CONTEXT_` placeholder. The SDK replaces it with the contents of the similar neighbors.

An example of a RAG system prompt:

```kotlin
"You are a helpful assistant. You will be provided with retrieved context. " +
    "Your answer to the user request should be based on the retrieved context. " +
    "Make sure you ONLY use the retrieved context to answer the question. " +
    "Retrieved context: _RETRIEVED_CONTEXT_"
```

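The SDK performs the placeholder substitution internally. The sketch below only illustrates the idea: the retrieved neighbor chunks replace `_RETRIEVED_CONTEXT_` in the system prompt. `fillRetrievedContext` is hypothetical, and joining the chunks with blank lines is an assumption, so the SDK may format the context differently. The `require` check is useful on its own: it catches a prompt that is missing the placeholder, which would otherwise silently drop the retrieved context.

```kotlin
// Placeholder used in the example RAG system prompt.
const val RETRIEVED_CONTEXT_PLACEHOLDER = "_RETRIEVED_CONTEXT_"

// Illustrative only: substitutes the retrieved neighbor chunks into the RAG
// system prompt, separating chunks with a blank line (an assumption).
fun fillRetrievedContext(systemPrompt: String, neighbors: List<String>): String {
    require(RETRIEVED_CONTEXT_PLACEHOLDER in systemPrompt) {
        "RAG system prompt must contain $RETRIEVED_CONTEXT_PLACEHOLDER"
    }
    return systemPrompt.replace(RETRIEVED_CONTEXT_PLACEHOLDER, neighbors.joinToString("\n\n"))
}
```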
The Kotlin SDK also supports single-image inference, where the image can be an HTTP web URL or an image captured on your local device.

Create an image inference with agent:

```kotlin
val agentTurnCreateResponseStream =
    turnService.createStreaming(
        AgentTurnCreateParams.builder()
            .agentId(agentId)
            .messages(
                listOf(
                    AgentTurnCreateParams.Message.ofUser(
                        UserMessage.builder()
                            .content(InterleavedContent.ofString("What is in the image?"))
                            .build()
                    ),
                    AgentTurnCreateParams.Message.ofUser(
                        UserMessage.builder()
                            .content(
                                InterleavedContent.ofImageContentItem(
                                    InterleavedContent.ImageContentItem.builder()
                                        .image(image)
                                        .type(JsonValue.from("image"))
                                        .build()
                                )
                            )
                            .build()
                    )
                )
            )
            .sessionId(sessionId)
            .build()
    )
```

Note that an image captured on the device must be Base64-encoded before it is sent to the model. Check out our demo app example here.
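Here is a minimal sketch of the Base64 step. It reads an image file and returns it as a `data:` URI. The helper name `imageFileToBase64DataUri`, the choice of the data-URI form, and inferring the MIME type from the file extension are all assumptions. Check the demo app for the exact format it passes to `.image(...)`.

```kotlin
import java.io.File
import java.util.Base64

// Hypothetical helper: reads an image file and returns it as a Base64 data URI,
// e.g. "data:image/jpeg;base64,...". The MIME type is inferred from the extension.
fun imageFileToBase64DataUri(file: File): String {
    val mimeType = when (file.extension.lowercase()) {
        "png" -> "image/png"
        "jpg", "jpeg" -> "image/jpeg"
        "webp" -> "image/webp"
        else -> throw IllegalArgumentException("Unsupported image type: ${file.name}")
    }
    val encoded = Base64.getEncoder().encodeToString(file.readBytes())
    return "data:$mimeType;base64,$encoded"
}
```

`java.util.Base64` is available on Android from API level 26. On older devices, `android.util.Base64.encodeToString(bytes, android.util.Base64.NO_WRAP)` produces the same unwrapped encoding.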

With the Kotlin Library managing all the major operational logic, there are minimal to no code changes between local and remote when running simple chat inference:

```kotlin
val result = client!!.inference().chatCompletion(
    InferenceChatCompletionParams.builder()
        .modelId(modelName)
        .messages(listOfMessages)
        .build()
)

// response contains the string returned by the model
val response = result.completionMessage().content().string()
```

For inference with a streaming response:

```kotlin
val result = client!!.inference().chatCompletionStreaming(
    InferenceChatCompletionParams.builder()
        .modelId(modelName)
        .messages(listOfMessages)
        .build()
)

// The response can be received as an asChatCompletionResponseStreamChunk as part of a callback.
// See the Android demo app for a detailed implementation example.
```

See the Android demo app for more details: Custom Tool Calling

The purpose of this section is to share more details with users that would like to dive deeper into the Llama Stack Kotlin Library. Whether you’re interested in contributing to the open source library, debugging or just want to learn more, this section is for you!

You must complete the following steps:

- Clone the repo:

```shell
git clone https://github.com/meta-llama/llama-stack-client-kotlin.git -b release/0.1.4.1
```

- Port the appropriate ExecuTorch libraries over into your Llama Stack Kotlin library environment:

```shell
cd llama-stack-client-kotlin-client-local
sh download-prebuilt-et-lib.sh --unzip
```

You will now see that the jni/, libs/, and AndroidManifest.xml files from the executorch.aar file are present in the local module. This way, the local client module is able to use the ExecuTorch SDK.

If you'd like to contribute to the Kotlin library, debug it, or experiment with it (for example, by adding print statements), run the following command in your terminal from the llama-stack-client-kotlin directory:

```shell
sh build-libs.sh
```

Output: .jar files located in the build-jars directory

Copy the .jar files over to the lib directory in your Android app. At the same time, make sure to remove the llama-stack-client-kotlin dependency from your app's build.gradle.kts file (or the demo app's, if you are using it) to avoid having multiple Llama Stack client dependencies.

Additional properties are currently supported with local inferencing. To get the tokens/sec metric for each inference call, add the following code to your Android app after you run your chatCompletion inference function. The reference app has this implementation as well:

```kotlin
val tps = (result._additionalProperties()["tps"] as JsonNumber).value as Float
```

We will be adding more properties in the future.
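If you want to cross-check the reported `tps` value, or you need a comparable number where the property is not available, you can compute throughput yourself. The sketch below is a hypothetical helper that divides a token count by elapsed wall-clock time measured with `System.nanoTime()`. Obtaining the token count, for example by counting streamed chunks, is left to the app and is only an approximation.

```kotlin
// Hypothetical helper (not part of the SDK): tokens per second from a token
// count and an elapsed time in nanoseconds.
fun tokensPerSecond(tokenCount: Int, elapsedNanos: Long): Float {
    require(elapsedNanos > 0) { "elapsed time must be positive" }
    val seconds = elapsedNanos / 1_000_000_000.0
    return (tokenCount / seconds).toFloat()
}
```

Typical usage is to record `val start = System.nanoTime()` before the inference call and pass `System.nanoTime() - start` afterwards.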

Requests that experience certain errors are automatically retried 2 times by default, with a short exponential backoff. Connection errors (for example, due to a network connectivity problem), 408 Request Timeout, 409 Conflict, 429 Rate Limit, and >=500 Internal errors will all be retried by default.
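The SDK handles retries internally. The self-contained sketch below only makes the described policy concrete: by default it allows up to two retries, retries connection errors (modeled as `IOException`) and responses with status 408, 409, 429, or anything >= 500, and waits with exponential backoff between attempts. `HttpStatusException`, `withRetries`, and the 500 ms base delay with an 8 s cap are hypothetical choices, not the SDK's actual values.

```kotlin
import java.io.IOException

// Hypothetical exception carrying an HTTP status code (not an SDK type).
class HttpStatusException(val code: Int) : Exception("HTTP $code")

// Retryable per the policy above: 408, 409, 429, and any status >= 500.
fun isRetryableStatus(code: Int): Boolean =
    code == 408 || code == 409 || code == 429 || code >= 500

fun isRetryable(e: Exception): Boolean =
    e is IOException || (e is HttpStatusException && isRetryableStatus(e.code))

// Exponential backoff: base, 2x base, 4x base, ..., capped at maxDelayMs.
fun backoffDelayMs(retryNumber: Int, baseDelayMs: Long = 500, maxDelayMs: Long = 8_000): Long =
    minOf(baseDelayMs shl (retryNumber - 1).coerceIn(0, 30), maxDelayMs)

// Runs `call`, retrying retryable failures up to maxRetries times (so at most
// maxRetries + 1 attempts). `sleep` is injectable to make the policy testable.
fun <T> withRetries(
    maxRetries: Int = 2,
    sleep: (Long) -> Unit = { Thread.sleep(it) },
    call: () -> T,
): T {
    for (retry in 1..maxRetries) {
        try {
            return call()
        } catch (e: Exception) {
            if (!isRetryable(e)) throw e // e.g. 404 fails immediately
            sleep(backoffDelayMs(retry))
        }
    }
    return call() // final attempt: any exception propagates to the caller
}
```

Doubling the delay on each retry gives an overloaded or rate-limited server progressively more time to recover. The cap keeps a large retry count from producing very long waits.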

You can provide a maxRetries on the client builder to configure this:

```kotlin
val client = LlamaStackClientOkHttpClient.builder()
    .fromEnv()
    .maxRetries(4)
    .build()
```

Requests time out after 1 minute by default. You can configure this on the client builder:

```kotlin
import java.time.Duration

val client = LlamaStackClientOkHttpClient.builder()
    .fromEnv()
    .timeout(Duration.ofSeconds(30))
    .build()
```

Requests can be routed through a proxy. You can configure this on the client builder:

```kotlin
import java.net.InetSocketAddress
import java.net.Proxy

val client = LlamaStackClientOkHttpClient.builder()
    .fromEnv()
    .proxy(
        Proxy(
            Proxy.Type.HTTP,
            InetSocketAddress("proxy.com", 8080)
        )
    )
    .build()
```

Requests are made to the production environment by default. You can connect to other environments, like sandbox, via the client builder:

```kotlin
val client = LlamaStackClientOkHttpClient.builder()
    .fromEnv()
    .sandbox()
    .build()
```

This library throws exceptions in a single hierarchy for easy handling:

- LlamaStackClientException - Base exception for all exceptions

- LlamaStackClientServiceException - HTTP errors with a well-formed response body we were able to parse. The exception message and the .debuggingRequestId() will be set by the server.
  - 400: BadRequestException
  - 401: AuthenticationException
  - 403: PermissionDeniedException
  - 404: NotFoundException
  - 422: UnprocessableEntityException
  - 429: RateLimitException
  - 5xx: InternalServerException
  - others: UnexpectedStatusCodeException

- LlamaStackClientIoException - I/O networking errors

- LlamaStackClientInvalidDataException - any other exceptions on the client side, e.g.:
  - We failed to serialize the request body
  - We failed to parse the response body (has access to response code and body)

If you encounter any bugs or issues while following this guide, please file an issue on our GitHub issue tracker.

We're aware of the following issues and are working to resolve them:

- Because of differences in model behavior when handling function calls and special tags such as "ipython", Llama Stack currently returns the streaming event payload for Llama 3.2 1B/3B models as a textDelta object rather than a toolCallDelta object when making a tool call. At StepComplete, Llama Stack still returns the entire toolCall detail.

We'd like to extend our thanks to the ExecuTorch team for providing their support as we integrated ExecuTorch as one of the local inference distributors for Llama Stack. Check out the ExecuTorch GitHub repo for more information.

The API interface is generated using the OpenAPI standard with Stainless.