-

-

Notifications

You must be signed in to change notification settings - Fork 37

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Add fix for hyperscan tokenizer #235

base: main

Are you sure you want to change the base?

Conversation

Limit punctuation regex to 3 or less punct. in a row ... no need to match on anything more Also reduce text around section regex when we have jibberish it would cause lots of bad matches - and unknown citations

Added an `if not m: continue` check to `extract_tokens()` to prevent processing invalid matches that fail when re-running the regex on extracted text. Previously, Hyperscan detected matches based on byte offsets, but some of these did not align properly when converted to Unicode string offsets. This caused `.match(text[start:end])` to return `None`, potentially leading to errors when calling `get_token(m, offset=start)`. Now, we explicitly skip such cases to ensure only valid tokens are processed.

|

Feels like the kind of PR that should really have a test? |

update all_whitespace to handle other whitespace characters not caputred in \s non breaking space em-space en-space thin space Add test

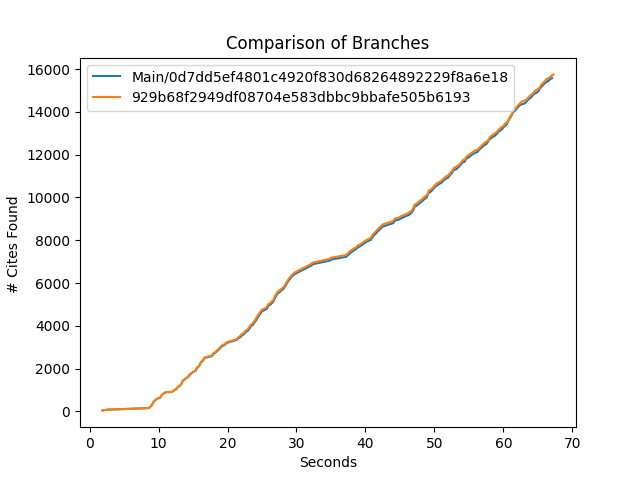

The Eyecite Report 👁️Gains and LossesThere were 95 gains and 0 losses. Click here to see details.

Time ChartGenerated Files |

| @@ -78,7 +78,10 @@ def all_whitespace(text: str) -> str: | |||

| Returns: | |||

| Text with collapsed whitespace characters. | |||

| """ | |||

| return re.sub(r"\s+", " ", text) | |||

| WHITESPACE_REGEX = ( | |||

| r"[ \t\n\r\f\v\u00A0\u2002\u2003\u2009\u200B\u202F\u205F]+" | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

the only character in the list that is not included in r"\s" is \u200b, so I would suggest doing

WHITESPACE_REGEX = "[u200b\s]+"

In [18]: [i for i in list("\t\n\r\f\v\u00A0\u2002\u2003\u2009\u200B\u202F\u205F") if not i.isspace()]

Out[18]: ['\u200b']

or

In [26]: re.sub(r"\s+", "", "\t\n\r\f\v\u00A0\u2002\u2003\u2009\u200B\u202F\u205F")

Out[26]: '\u200b'

This

- to be more clear;

- because maybe "\s" contains more characters than what you are listing?

| @@ -466,6 +466,9 @@ def on_match(index, start, end, flags, context): | |||

| start = byte_to_str_offset[start] | |||

| end = byte_to_str_offset[end] | |||

| m = extractor.compiled_regex.match(text[start:end]) | |||

| if not m: | |||

| # skip if re-run regex fails to detect match | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This is not expected to be common, right?

Why don't we put a logger.error here, so we can analyze what's going on in the edge cases

| @@ -630,6 +630,12 @@ def test_find_citations(self): | |||

| metadata={'plaintiff': 'Commonwealth', 'defendant': 'Muniz', | |||

| 'court': 'pa'})]), | |||

| ('Foo v. Bar, 1 F.Supp. 1 (SC 1967)', [case_citation(volume='1', reporter='F.Supp.', year=1967, page='1', metadata={'plaintiff': 'Foo', 'defendant': 'Bar', 'court': 'sc'})]), | |||

| ('Shady Grove Farms \xa0v Goldsmith Seeds 1 U.S. 1 (1981)', [ | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

this is an example for the whitespace change; however; this was already covered by the simple r"\s" regex as pointed in the other comment. I think you should try to cover the error cases we have seen. For example would still be broken

In [32]: clean_text(' \x08*\x07\x07\u038bþİ\u038b\u202cڋ\u202a-\x14V\u202c\u202c', ["all_whitespace"])

Out[32]: ' \x08*\x07\x07\u038bþİ\u038b\u202cڋ\u202a-\x14V\u202c\u202c'| @@ -52,7 +52,7 @@ def short_cite_re(regex): | |||

|

|

|||

| # Regex to match punctuation around volume numbers and stopwords. | |||

| # This could potentially be more precise. | |||

| PUNCTUATION_REGEX = r"[^\sa-zA-Z0-9]*" | |||

| PUNCTUATION_REGEX = r"[^\sa-zA-Z0-9]{,3}" | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

why make these changes? do you have examples or comments on why?

| @@ -79,7 +79,7 @@ def short_cite_re(regex): | |||

| ) | |||

|

|

|||

| # Regex for SectionToken | |||

| SECTION_REGEX = r"(\S*§\S*)" | |||

| SECTION_REGEX = space_boundaries_re(r"([\w\.\,\-]*§[\w\.\,\-]*)") | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

escape \ not needed inside []

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

From the benchmark, it seems we are losing these?

§15.50(a)[§]52-249a

Added an

if not m: continuecheck toextract_tokens()to prevent processing invalid matches that fail when

re-running the regex on extracted text.

Previously, Hyperscan detected matches based on byte

offsets, but some of these did not align properly

when converted to Unicode string offsets. This caused

.match(text[start:end])to returnNone,potentially leading to errors when calling

get_token(m, offset=start).Now, we explicitly skip such cases to ensure

only valid tokens are processed.

Also - put some rationale constraints on the section regex

and punctuation regexes to avoid matching indefinitely on gibberish.