
杜贵旺 (hw01, hw02, hw03) #14


base: main


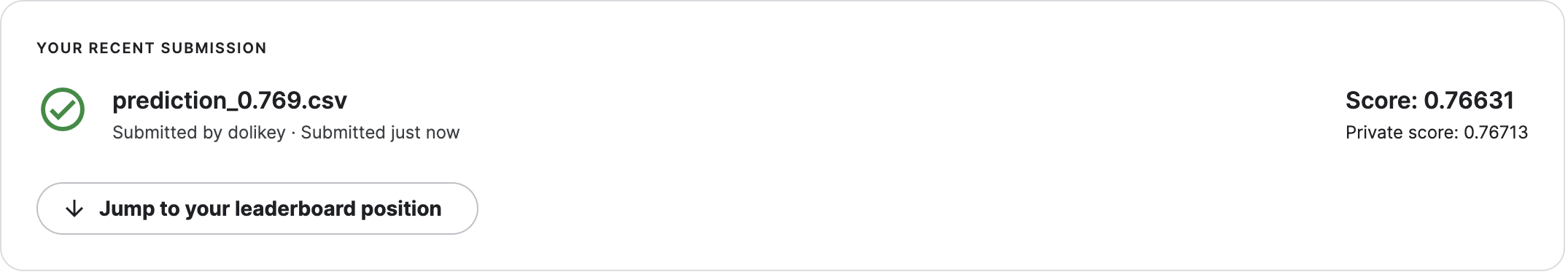


hw02

Kaggle best private score: 0.76713

● (1%) Simple baseline: 0.45797 (sample code)

Default (hidden_layers = 1): [005/005] Train Acc: 0.460776 Loss: 1.876422 | Val Acc: 0.457841 loss: 1.889746

Experiment 2: [004/005] Train Acc: 0.494620 Loss: 1.715949 | Val Acc: 0.473995 loss: 1.807472

Experiment 3: [005/005] Train Acc: 0.486311 Loss: 1.749964 | Val Acc: 0.471650 loss: 1.814920

Experiment 4: [003/005] Train Acc: 0.489009 Loss: 1.736357 | Val Acc: 0.470320 loss: 1.820758

Conclusion: adding more hidden layers improves accuracy.

Experiment 5: [022/030] Train Acc: 0.502249 Loss: 1.686878 | Val Acc: 0.472946 loss: 1.809189

Conclusion: with a larger num_epoch the model may keep converging, but it converges fairly quickly, so num_epoch does not need to be very high. It is better to settle the other hyperparameters first and increase the number of epochs afterwards.

Experiment 6: [009/010] Train Acc: 0.763975 Loss: 0.731162 | Val Acc: 0.684132 loss: 1.046159

Conclusion: increasing concat_nframes improves accuracy.

Experiment 7: [010/010] Train Acc: 0.690315 Loss: 0.986674 | Val Acc: 0.702654 loss: 0.946877

Experiment 8: [010/010] Train Acc: 0.620712 Loss: 1.259031 | Val Acc: 0.666424 loss: 1.080704. It would probably keep converging with more epochs.

Conclusion: adding dropout improves accuracy, and the effect is pronounced: randomly masking neurons helps prevent overfitting. A larger dropout rate is not always better, though.

Experiment 9: [010/010] Train Acc: 0.702750 Loss: 0.925790 | Val Acc: 0.715762 loss: 0.889721

Conclusion: adding batchnorm improves accuracy.

Experiment 10: [096/100] Train Acc: 0.767724 Loss: 0.708376 | Val Acc: 0.765344 loss: 0.743654
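In hw02 the largest single jump in validation accuracy (from about 0.47 to 0.68) came from increasing concat_nframes. The idea is that each frame's phoneme label is predicted from a window of neighbouring frames rather than from one frame alone. Below is a minimal stdlib-only sketch of that windowing, assuming each frame is a list of floats; the function name `concat_frames` and the edge-replication policy at utterance boundaries are assumptions, not taken from the experiments above.

```python
from typing import List

Frame = List[float]


def concat_frames(frames: List[Frame], concat_nframes: int) -> List[Frame]:
    """Concatenate every frame with its neighbours into one longer vector.

    concat_nframes must be odd: the centre frame plus concat_nframes // 2
    frames on each side. Neighbours that fall outside the utterance are
    replaced by the nearest edge frame (an assumed boundary policy).
    """
    if concat_nframes < 1 or concat_nframes % 2 == 0:
        raise ValueError("concat_nframes must be a positive odd number")
    half = concat_nframes // 2
    n = len(frames)
    out: List[Frame] = []
    for t in range(n):
        window: Frame = []
        for offset in range(-half, half + 1):
            # Clamp the neighbour index into [0, n - 1] (edge replication).
            idx = min(max(t + offset, 0), n - 1)
            window.extend(frames[idx])
        out.append(window)
    return out


if __name__ == "__main__":
    # Three toy 2-dimensional frames from one utterance.
    frames = [[1.0, 1.5], [2.0, 2.5], [3.0, 3.5]]
    for row in concat_frames(frames, 3):
        print(row)
```

With concat_nframes = 3 and 2-dimensional frames, each output row has 6 values, so the input size of the first linear layer has to grow by the same factor of concat_nframes.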

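The dropout and batchnorm experiments in hw02 both report clear gains. To make concrete what those layers compute during training (in PyTorch they correspond to `nn.Dropout` and `nn.BatchNorm1d`), here is a stdlib-only sketch. It is an illustration, not the code used in these experiments: the function names are hypothetical, and it covers training mode only, leaving out the running mean and variance that batch norm uses at evaluation time.

```python
import math
import random
from typing import List


def inverted_dropout(xs: List[float], p: float, rng: random.Random) -> List[float]:
    """Training-mode dropout: zero each value with probability p and scale
    the survivors by 1 / (1 - p) so the expected value is unchanged."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    keep = 1.0 - p
    return [x / keep if rng.random() >= p else 0.0 for x in xs]


def batch_norm_train(batch: List[List[float]], gamma: List[float],
                     beta: List[float], eps: float = 1e-5) -> List[List[float]]:
    """Training-mode batch norm over a (batch, features) matrix: normalise
    each feature column with the batch mean and biased variance, then apply
    the learnable scale gamma and shift beta."""
    n, dims = len(batch), len(batch[0])
    out = [[0.0] * dims for _ in range(n)]
    for j in range(dims):
        col = [row[j] for row in batch]
        mean = sum(col) / n
        var = sum((v - mean) ** 2 for v in col) / n  # biased (divide by n)
        std = math.sqrt(var + eps)
        for i in range(n):
            out[i][j] = gamma[j] * (col[i] - mean) / std + beta[j]
    return out


if __name__ == "__main__":
    rng = random.Random(0)
    print(inverted_dropout([1.0, 2.0, 3.0, 4.0], p=0.5, rng=rng))
    print(batch_norm_train([[1.0, 10.0], [3.0, 30.0]], gamma=[1.0, 1.0], beta=[0.0, 0.0]))
```

Because dropout zeroes a fraction p of activations on every step, a larger p regularizes more strongly but leaves less capacity for fitting the data, which is consistent with the observation that a larger dropout rate is not always better.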
hw03

Simple: 0.50099

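Medium: 0.73207 (Training Augmentation + Train Longer)

Experiment 1:

Experiment 2: [ Train | 020/020 ] loss = 1.26153, acc = 0.56024

Conclusion: after adding image transforms, convergence slowed down and the model actually started to overfit.

Experiment 3: [ Valid | 019/020 ] loss = 1.43384, acc = 0.52173 -> best

Conclusion: suitable image transforms improve recognition accuracy.

Experiment 4: train_tfm = tf.Compose([

Conclusion: this kind of large-parameter model converges even more slowly.

Experiment 5: redesigned the output layer, added dropout, and reran with n_epochs = 20. [ Valid | 001/020 ] loss = 0.72903, acc = 0.76770 -> best

Conclusion: it looks like the test set also has to be changed for this to work; at this point it is clear that pretraining is very powerful.

Experiment 6: [ Valid | 032/080 ] loss = 2.28870, acc = 0.19313. Converges too slowly.

Experiment 7: somewhat better than the previous one, but still converges very slowly.

Experiment 8: one epoch takes 5 min 30 s. [ Train | 001/010 ] loss = 2.31989, acc = 0.14399

Conclusion: converges slightly faster, but about 5 minutes per epoch is still slow.

Experiment 9: [ Train | 001/3000 ] loss = 2.31403, acc = 0.14575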
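The hw03 experiments found that augmentation slowed convergence and that only suitable transforms improved accuracy. In PyTorch such transforms are usually chained with `torchvision.transforms.Compose`, and the `train_tfm = tf.Compose([` fragment appears to be built that way. As a dependency-free illustration of what two common training-time transforms do, here is a sketch of a random horizontal flip and a padded random crop on a small grayscale image stored as nested lists. The image representation and the function names are hypothetical; this is not the pipeline used in the experiments.

```python
import random
from typing import List

# Hypothetical representation: a grayscale image as a list of pixel rows.
Image = List[List[int]]


def random_hflip(img: Image, p: float, rng: random.Random) -> Image:
    """Mirror the image left-right with probability p."""
    if rng.random() < p:
        return [row[::-1] for row in img]
    return [row[:] for row in img]


def random_crop(img: Image, pad: int, rng: random.Random) -> Image:
    """Zero-pad `pad` pixels on each side, then crop back to the original
    size at a random offset (a random shift of up to `pad` pixels)."""
    h, w = len(img), len(img[0])
    padded = [[0] * (w + 2 * pad) for _ in range(h + 2 * pad)]
    for i in range(h):
        for j in range(w):
            padded[i + pad][j + pad] = img[i][j]
    top = rng.randint(0, 2 * pad)   # randint is inclusive on both ends
    left = rng.randint(0, 2 * pad)
    return [row[left:left + w] for row in padded[top:top + h]]


def train_augment(img: Image, rng: random.Random) -> Image:
    """Training-only pipeline: flip, then shift. Validation and test
    images would skip this step."""
    return random_crop(random_hflip(img, p=0.5, rng=rng), pad=1, rng=rng)


if __name__ == "__main__":
    rng = random.Random(42)
    img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    for _ in range(3):
        print(train_augment(img, rng))
```

Each epoch then sees a slightly different version of every training image, which makes the training set harder to fit; that is one plausible reason training converged more slowly once augmentation was added.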

hw01

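Changed the seed

Selected the features with the highest correlation

Changed the number of input features

Changed the number of neurons in the hidden layers

Switched to a different optimizer

Adjusted the learning rate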
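The hw01 changes are listed without code. As a sketch of two of them, fixing the seed and keeping only the most correlated features, here is a stdlib-only version. It assumes "highest correlation" means the largest absolute Pearson correlation with the regression target, and the function names are hypothetical; it is not the code used for this submission.

```python
import math
import random
from typing import List, Sequence, Tuple


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0.0 or sy == 0.0:
        return 0.0  # a constant column has no linear relationship to report
    return cov / (sx * sy)


def select_top_k(rows: List[List[float]], target: List[float], k: int) -> List[int]:
    """Indices (ascending) of the k feature columns whose absolute Pearson
    correlation with the target is largest; ties go to the lower index."""
    scores = []
    for j in range(len(rows[0])):
        column = [row[j] for row in rows]
        scores.append((abs(pearson(column, target)), j))
    scores.sort(key=lambda s: (-s[0], s[1]))
    return sorted(j for _, j in scores[:k])


def train_valid_split(n: int, valid_ratio: float, seed: int) -> Tuple[List[int], List[int]]:
    """Shuffle row indices with a fixed seed so the split is reproducible."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_valid = int(n * valid_ratio)
    return idx[n_valid:], idx[:n_valid]


if __name__ == "__main__":
    rows = [[1, 5, 2], [2, 3, 2], [3, 4, 2], [4, 1, 2]]
    target = [2, 4, 6, 8]
    print(select_top_k(rows, target, k=2))  # [0, 1]
    print(train_valid_split(10, valid_ratio=0.2, seed=0))
```

Keeping only the selected columns also shrinks the model's input dimension, which is why selecting features and changing the number of input features go together.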