NVIDIA-accelerated, deep learned model support for object detection including DetectNet.

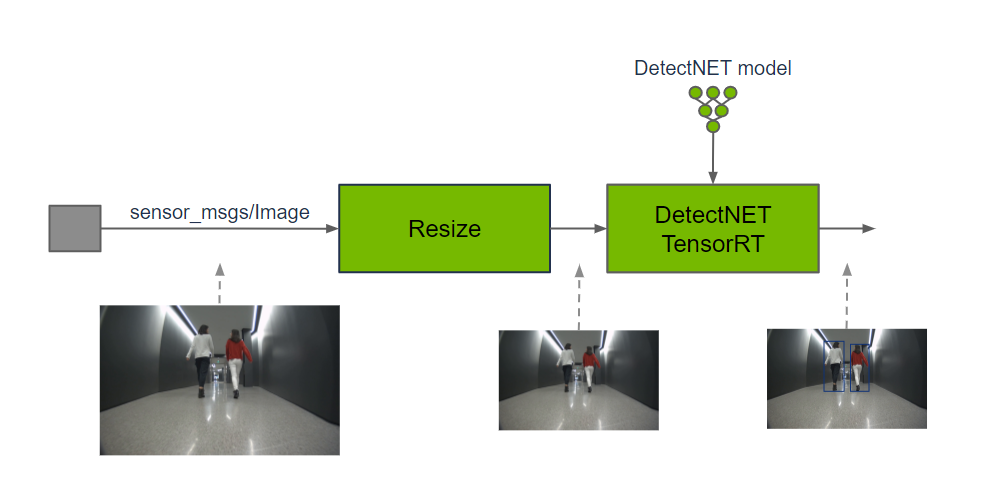

Isaac ROS Object Detection contains ROS 2 packages to perform object detection.

isaac_ros_rtdetr, isaac_ros_detectnet, and isaac_ros_yolov8 each provide a method for spatial classification using bounding boxes with an input image. Classification is performed by a GPU-accelerated model of the appropriate architecture:

- isaac_ros_rtdetr: RT-DETR models
- isaac_ros_detectnet: DetectNet models
- isaac_ros_yolov8: YOLOv8 models

The output prediction can be used by perception functions to understand the presence and spatial location of an object in an image.

Each Isaac ROS Object Detection package is used in a graph of nodes to provide a bounding box detection array with object classes from an input image. A trained model of the appropriate architecture is required to produce the detection array.

Input images may need to be cropped and resized to maintain the aspect ratio and match the input resolution of the specific object detection model; image resolution may be reduced to improve DNN inference performance, which typically scales directly with the number of pixels in the image. isaac_ros_dnn_image_encoder provides DNN encoder utilities to process the input image into Tensors for the object detection models.
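The crop step described above can be illustrated with a little geometry. The following is a minimal sketch, not the isaac_ros_dnn_image_encoder implementation: the function name `center_crop_for_aspect` and the example resolutions are assumptions chosen for illustration. It computes the largest centered crop of the source image that has the same aspect ratio as the model input, so that a later resize does not distort objects.

```python
def center_crop_for_aspect(src_w, src_h, dst_w, dst_h):
    """Return (x, y, w, h) of the largest centered crop of a src_w x src_h
    image whose aspect ratio matches dst_w x dst_h.

    Hypothetical helper for illustration only; not part of any Isaac ROS API.
    """
    # Compare aspect ratios by cross-multiplication to stay in integers.
    if src_w * dst_h > src_h * dst_w:
        # Source is wider than the target: keep full height, trim the width.
        crop_h = src_h
        crop_w = src_h * dst_w // dst_h
    else:
        # Source is taller than (or equal to) the target: keep full width.
        crop_w = src_w
        crop_h = src_w * dst_h // dst_w
    # Center the crop rectangle inside the source image.
    x = (src_w - crop_w) // 2
    y = (src_h - crop_h) // 2
    return x, y, crop_w, crop_h


# Example: a 1920x1080 camera image feeding a square 640x640 model input
# (both sizes are assumed values, not taken from a specific model).
crop = center_crop_for_aspect(1920, 1080, 640, 640)
print(crop)
```

The cropped region would then be resized to the model input resolution. Padding the image instead of cropping it is another common way to preserve aspect ratio; which one applies depends on how the model was trained.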

Prediction results are decoded in model-specific ways, often involving clustering and thresholding to group multiple detections on the same object and reduce spurious detections. Output is provided as a detection array with object classes.
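To make the thresholding and grouping idea concrete, here is a minimal sketch that uses a confidence threshold followed by greedy non-maximum suppression (NMS). NMS is swapped in here as one widely used grouping technique; the decoders in these packages are model-specific and may work differently. The function names, the tuple layout `(box, score, label)`, and the threshold values are all assumptions for illustration.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def filter_detections(dets, score_threshold=0.5, iou_threshold=0.5):
    """Drop low-confidence detections, then keep only the best-scoring box
    among same-class boxes that overlap heavily (greedy NMS).

    dets: list of (box, score, label) tuples; hypothetical layout.
    """
    # Thresholding: discard spurious, low-confidence detections.
    candidates = sorted(
        (d for d in dets if d[1] >= score_threshold),
        key=lambda d: d[1],
        reverse=True,
    )
    kept = []
    for box, score, label in candidates:
        # Grouping: skip a box if a stronger box of the same class already
        # covers (almost) the same region.
        if all(label != k[2] or iou(box, k[0]) < iou_threshold for k in kept):
            kept.append((box, score, label))
    return kept


raw = [
    ((10, 10, 50, 50), 0.9, "person"),
    ((12, 12, 52, 52), 0.8, "person"),      # duplicate of the first box
    ((100, 100, 150, 150), 0.3, "person"),  # below the score threshold
    ((200, 200, 240, 240), 0.7, "car"),
]
result = filter_detections(raw)
print(result)
```

In this example the second box overlaps the first by more than the IoU threshold and the third falls below the score threshold, so two detections remain.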

DNNs need a minimum number of pixels to be visible on an object to provide a classification prediction. If a person cannot see the object in the image, it’s unlikely the DNN will. Reducing input resolution to reduce compute may reduce what is detected in the image. For example, a 1920x1080 image containing a distant person occupying 1K pixels (64x16) would have 0.25K pixels (32x8) when downscaled by 1/2 in both X and Y. The DNN may detect the person in the original input image, which provides 1K pixels for the person, and fail to detect the same person at the downscaled resolution, which provides only 0.25K pixels for the person.
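The arithmetic in this example can be checked with a few lines of Python. This is only a sketch of the pixel-count reasoning; the helper name `pixels_on_object` is hypothetical.

```python
def pixels_on_object(obj_w, obj_h, scale):
    """Pixels covering an obj_w x obj_h object after scaling both axes."""
    return round(obj_w * scale) * round(obj_h * scale)


full = pixels_on_object(64, 16, 1.0)   # person in the 1920x1080 image
half = pixels_on_object(64, 16, 0.5)   # same person after 1/2 downscale
print(full, half, half / full)
```

Because the scale factor applies to both axes, the pixel count on the object falls with the square of the scale: halving the resolution leaves one quarter of the pixels.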

Object detection classifies a rectangle of pixels as containing an object, whereas image segmentation provides more information and uses more compute to produce a classification per pixel. Object detection is used to know if, and where in a 2D image, the object exists. If a 3D spatial understanding or the size of an object in pixels is required, use image segmentation.

This package is powered by NVIDIA Isaac Transport for ROS (NITROS), which leverages type adaptation and negotiation to optimize message formats and dramatically accelerate communication between participating nodes.

| Sample Graph | Input Size | AGX Orin | Orin NX | Orin Nano 8GB | x86_64 w/ RTX 4060 Ti | x86_64 w/ RTX 4090 |
|---|---|---|---|---|---|---|
| RT-DETR Object Detection Graph<br>SyntheticaDETR | 720p | 71.9 fps<br>24 ms @ 30Hz | 30.8 fps<br>41 ms @ 30Hz | 21.3 fps<br>61 ms @ 30Hz | 205 fps<br>8.7 ms @ 30Hz | 400 fps<br>6.3 ms @ 30Hz |
| DetectNet Object Detection Graph | 544p | 165 fps<br>20 ms @ 30Hz | 115 fps<br>26 ms @ 30Hz | 63.2 fps<br>36 ms @ 30Hz | 488 fps<br>10 ms @ 30Hz | 589 fps<br>10 ms @ 30Hz |

Please visit the Isaac ROS Documentation to learn how to use this repository.

Update 2024-09-26: Update for ZED compatibility