Releases: latchbio/latch

v1.14.0

v1.13.0

Improvements to Boilerplate (latch init)

- include LaunchPlan
- use new metadata object
- image faster on rebuild

Documentation improvements

v1.11.0

Create your own Test Data, improved latch ls, crash report...

Test Data (LaunchPlan)

Define a set of default parameter values that anyone can use to quickly execute a workflow. Add it to the same file where your workflows and tasks live:

```python
from latch.resources.launch_plan import LaunchPlan
from latch.types import LatchFile

LaunchPlan(
    assemble_and_sort,  # the workflow function, defined in this same file
    "small test",  # name of the test data
    {"read1": LatchFile("latch:///foobar"), "read2": LatchFile("latch:///foobar")},
)
```
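Because the test data is a mapping from parameter names to values, a misspelled or missing parameter name is easy to overlook. The sketch below is a hypothetical local check, not part of the Latch SDK: it uses the standard library's `inspect.signature(...).bind(...)` to confirm the defaults could be used to call the workflow function. `check_test_data` is an illustrative name, and `assemble_and_sort` here is a plain stand-in that takes strings instead of `LatchFile`s.

```python
import inspect
from typing import Any, Callable, Dict


def check_test_data(wf: Callable[..., Any], defaults: Dict[str, Any]) -> None:
    """Hypothetical helper: raise TypeError if `defaults` cannot call `wf`.

    Signature.bind rejects unknown parameter names and missing required ones.
    """
    inspect.signature(wf).bind(**defaults)


def assemble_and_sort(read1: str, read2: str) -> str:
    """Stand-in for the real workflow; takes strings instead of LatchFiles."""
    return read1 + read2


check_test_data(assemble_and_sort, {"read1": "r1.fastq", "read2": "r2.fastq"})  # OK

try:
    check_test_data(assemble_and_sort, {"read1": "r1.fastq", "raed2": "r2.fastq"})
except TypeError as err:
    print(f"invalid test data: {err}")
```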

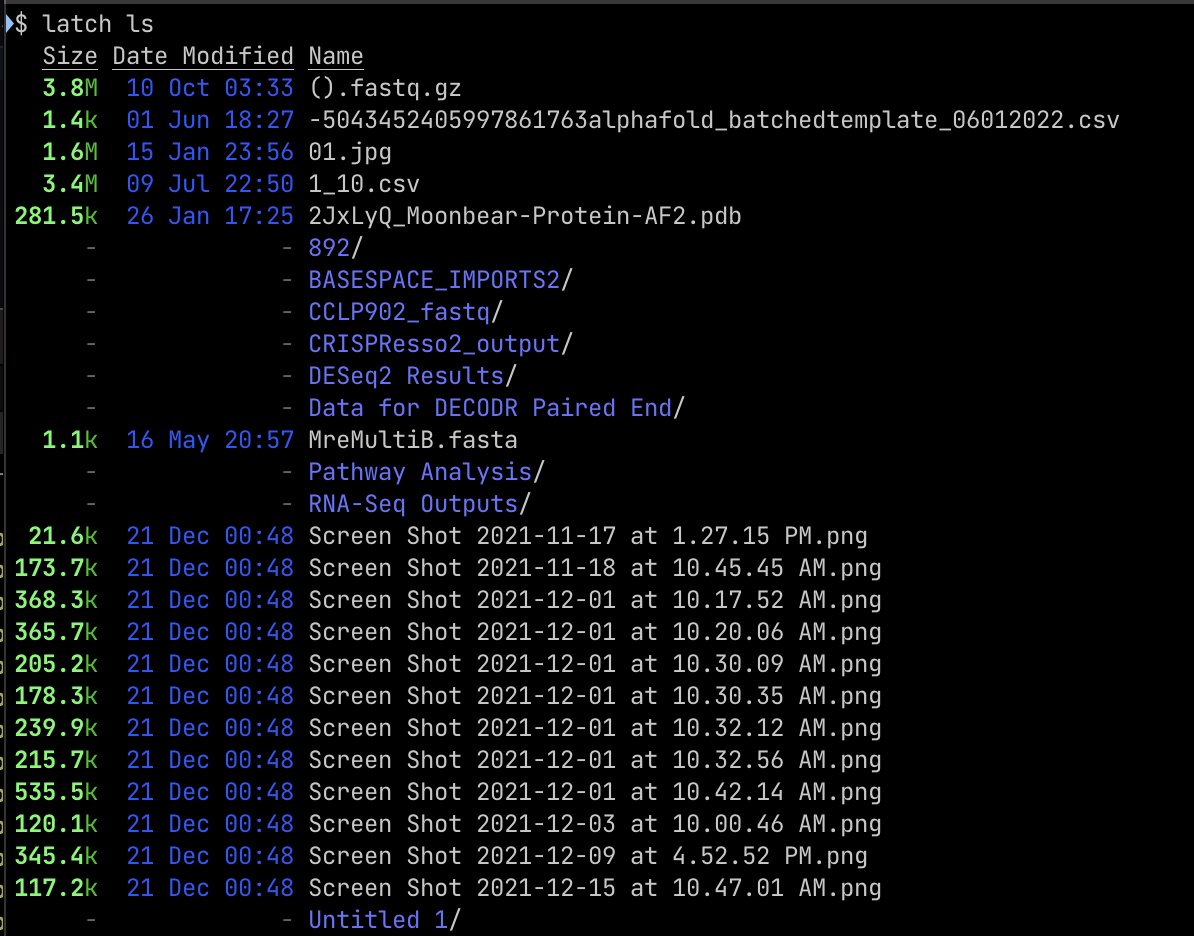

latch ls improvements

latch ls now uses a color scheme inspired by exa's.

Crash Report

A tarball named .latch_report.tar.gz will be produced in the working directory of a failed SDK execution. This tarball will have platform information, stacktrace logs and bundled code to reproduce/debug your execution.
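Since the report is an ordinary gzip-compressed tarball, the standard library's `tarfile` module can list its contents before you unpack it. A minimal sketch; `list_crash_report` is an illustrative name, and the member names inside the report depend on the SDK version, so none are assumed here.

```python
import os
import tarfile
from typing import List


def list_crash_report(path: str = ".latch_report.tar.gz") -> List[str]:
    """Return the sorted member names of a gzip-compressed crash report."""
    with tarfile.open(path, "r:gz") as report:
        return sorted(report.getnames())


# Run from the working directory of the failed execution.
REPORT = ".latch_report.tar.gz"
if os.path.exists(REPORT):
    for name in list_crash_report(REPORT):
        print(name)
```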

v1.10.1

Remote Docker Build

Pass the --remote flag to latch register to build your workflow on a remote machine, saving local resources as well as upload/download time.

```shell
latch register foobar --remote
```

You no longer need a local Docker daemon with this feature.

v1.9.0: Latch Preview


This update introduces the latch preview command, which lets you preview a workflow's parameter interface without registering it, building a Docker image, and waiting an indeterminate amount of time between iterations.

To use it, navigate to the top-level workflow directory and run latch preview <wf-function-name>, where <wf-function-name> is the name of the function decorated with @workflow. The preview opens in your browser. To see new changes, run the command again and refresh the page.

An example user flow:

```shell
$ latch init test_workflow
...
$ cd test_workflow/
$ latch preview assemble_and_sort
(opens the preview in a new tab)
```

v1.8.1

Start/Stop + latch exec fixes

With this version, you can start and stop tasks from the Latch console. (Also make sure you build tasks with the latest Dockerfile shipped with latch init <>, or that procps is installed in your image.)

latch exec now properly supports terminal escape codes, so programs like htop and vim function correctly.

v1.6.1

Caching

You can cache the results of tasks to prevent wasted computation. Tasks that are identified as "the same" (rigorous criteria to identify cache validity to follow) can succeed instantly with outputs saved from previous executions.

Caching is very helpful when debugging, sharing tasks between workflows, or running batched executions over similar input data.

Every Latch task decorator, e.g. small_task or large_gpu_task, has a cacheable equivalent prefixed with cached_, e.g. cached_small_task, which takes a cache version:

```python
import time

from latch.resources.tasks import cached_small_task


@cached_small_task("someversion")  # "someversion" is the cache version
def do_sleep(foo: str) -> str:
    time.sleep(60)  # expensive work that a cache hit skips
    return foo
```

When will my tasks get cached?

Tasks are not cached by default, because task code can perform side effects (e.g. communicating with servers or uploading files) that would be skipped whenever a cached result is reused.

Tasks are only cached when they use one of the cached_<> task decorators.
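To see why side effects rule out caching by default, here is a toy in-memory cache decorator. It is a hypothetical illustration, not how Latch stores task caches (`toy_cached` and `upload_and_echo` are made-up names): on a cache hit the function body never runs, so the side effect it would have performed is silently skipped.

```python
import functools
from typing import Any, Callable, Dict, Tuple

# Toy in-memory store keyed by (function name, cache version, positional args).
_cache: Dict[Tuple[Any, ...], Any] = {}


def toy_cached(version: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Hypothetical decorator illustrating caching; not Latch's implementation."""
    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        @functools.wraps(fn)
        def wrapper(*args: Any) -> Any:
            key = (fn.__name__, version, args)
            if key not in _cache:
                _cache[key] = fn(*args)  # body and its side effects run only on a miss
            return _cache[key]
        return wrapper
    return decorator


uploaded = []


@toy_cached("v1")
def upload_and_echo(foo: str) -> str:
    uploaded.append(foo)  # stands in for a side effect such as uploading a file
    return foo


upload_and_echo("sample.fastq")
upload_and_echo("sample.fastq")  # cache hit: the body is skipped
print(uploaded)  # ['sample.fastq']: the second upload never happened
```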

Task cache isolation

Task caches are not shared between accounts, or even necessarily between workflows within the same account. The following is a list of cases where task caches are guaranteed to be different:

- each latch account will have its own task cache
- each task with a unique name will have its own task cache
- whenever the task function signature changes (name or typing of input or output parameters), the task will receive a new cache
- whenever the task cache version changes, the task will receive a new cache
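The isolation rules above can be read as the ingredients of a cache key. The sketch below is an assumption for illustration, not Latch's actual key format: it hashes a hypothetical `account_id`, the task name, the function signature as rendered by `inspect.signature`, and the cache version, so changing any one of them yields a different key. A real cache would additionally look up individual entries by input values.

```python
import hashlib
import inspect
from typing import Any, Callable


def cache_key(account_id: str, task: Callable[..., Any], version: str) -> str:
    """Hypothetical key naming a task's cache (not Latch's real format).

    Any change to the account, task name, signature, or cache version
    produces a different key, i.e. a fresh cache.
    """
    signature = str(inspect.signature(task))  # e.g. "(foo: str) -> str"
    parts = [account_id, task.__name__, signature, version]
    return hashlib.sha256("\x00".join(parts).encode()).hexdigest()


def do_sleep(foo: str) -> str:
    return foo


base = cache_key("account-a", do_sleep, "someversion")
assert cache_key("account-b", do_sleep, "someversion") != base  # other account
assert cache_key("account-a", do_sleep, "otherversion") != base  # bumped version
```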

Why there is a cache version

We require a cache version because we cannot naively assume that the checksum of a task function's body should be used in our cache key.

Doing so would assume that changing the task body logic always changes the outputs of the task. Many changes to the body (e.g. adding print statements) have no effect on the outputs and would cause an expensive cache invalidation for no reason.

Therefore we are rolling out the initial cached task feature with user-specified cache versions. If this becomes too cumbersome and the vast majority of re-registers would benefit from automatic cache invalidation when task body code changes, we will pin the cache version to a digest of the function body.
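A small experiment illustrates the problem with keying on the function body. Here the "body checksum" is approximated by hashing the function's compiled bytecode and constants; this is an assumption for illustration, since the notes do not say how such a digest would be computed. Adding a print statement leaves the output unchanged but produces a different digest, which would invalidate the cache.

```python
import hashlib
from typing import Any, Callable


def body_digest(fn: Callable[..., Any]) -> str:
    """Stand-in for 'checksum of the function body': hash bytecode + constants."""
    code = fn.__code__
    return hashlib.sha256(code.co_code + repr(code.co_consts).encode()).hexdigest()


def normalize(x: float) -> float:
    return x / 100


def normalize_logged(x: float) -> float:
    print("normalizing", x)  # debugging output only
    return x / 100


assert normalize(42.0) == normalize_logged(42.0)  # same outputs...
assert body_digest(normalize) != body_digest(normalize_logged)  # ...different digest
```

A user-specified version sidesteps this: the key only changes when you deliberately bump it.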

v1.5.3

Interactive remote task execution alpha.

Use latch exec <task_name> to open a local shell inside a running remote task. Reach out to the core Latch engineering team for access to this feature while it is still in alpha.

v1.4.0


This release of the SDK provides two new features, namely messaging and new constructs for workflow metadata.

Messaging

Task executions produce logs, which are displayed on the Latch console to give users visibility into their workflows. However, these logs tend to be very verbose, and sifting through them for useful signals is tedious. Important information, warnings, and errors should instead be displayed prominently. The Latch SDK's new messaging feature accomplishes this.

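```python
from latch.functions.messages import message
from latch.resources.tasks import small_task


@small_task
def task():
    ...
    try:
        ...
    except ValueError:
        title = 'Invalid sample ID column selected'
        body = 'Your file indicates that sample columns a, b are valid'
        # Surface the error prominently in the console instead of burying it in logs
        message(typ='error', data={'title': title, 'body': body})
    ...
```

The typ parameter affects how your message is styled. It currently accepts three options: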

- info
- warning
- error

The data parameter contains the message to be displayed. It is a Python dict with two required keys:

- title: the title of your message
- body: the contents of your message

For more information, see latch.functions.messages under the API docs.
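Because typ accepts only three values and data needs both keys, a thin wrapper can catch mistakes before message is called. `build_message` below is a hypothetical helper, not part of the SDK; it returns keyword arguments you could pass as `message(**kwargs)` inside a task, and rejecting empty strings is a choice of this sketch rather than a documented SDK rule.

```python
from typing import Any, Dict

# The three styles accepted by `typ`, per the release notes above.
MESSAGE_TYPES = ("info", "warning", "error")


def build_message(typ: str, title: str, body: str) -> Dict[str, Any]:
    """Hypothetical helper: validate and return kwargs for message(...)."""
    if typ not in MESSAGE_TYPES:
        raise ValueError(f"typ must be one of {MESSAGE_TYPES}, got {typ!r}")
    if not title or not body:
        raise ValueError("data needs a non-empty title and body")
    return {"typ": typ, "data": {"title": title, "body": body}}


kwargs = build_message(
    "error",
    "Invalid sample ID column selected",
    "Your file indicates that sample columns a, b are valid",
)
# Inside a task: message(**kwargs)
```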

Docstring Metadata ---> LatchMetadata

The new LatchRule, LatchParameter, LatchAuthor, and LatchMetadata constructs serve as a drop-in replacement for docstring metadata. This is still backwards compatible, but going forward we recommend switching to this new convention.

In previous versions of Latch, a workflow would have to have a specifically formatted docstring that described metadata about itself and its parameters - this approach was error-prone and difficult to use, both because of its somewhat contrived format and because no errors were exposed to the user. Now, to annotate a workflow with metadata, first create a LatchMetadata object like so:

```python
from latch.types.metadata import LatchAuthor, LatchMetadata, LatchParameter

metadata = LatchMetadata(
    display_name="My Workflow",
    documentation="https://github.com/author/my_workflow/README.md",
    author=LatchAuthor(
        name="Workflow Author",
        email="[email protected]",
        github="https://github.com/author",
    ),
    repository="https://github.com/author/my_workflow",
    license="MIT",
)
```

This corresponds directly to the following part of the old-style docstring:

```yaml
__metadata__:
    display_name: My Workflow
    documentation: https://github.com/author/my_workflow/README.md
    author:
        name: Workflow Author
        email: [email protected]
        github: https://github.com/author
    repository: https://github.com/author/my_workflow
    license:
        id: MIT
```

A LatchMetadata object has an attribute parameters which is a dictionary mapping parameter names to LatchParameter objects. For each parameter of your workflow, create a LatchParameter object and put it in metadata.parameters. For example,

```python
metadata.parameters["param_name"] = LatchParameter(
    display_name="Parameter Name",
    description="This is a parameter",
    hidden=False,
)
```

This creates metadata (and a corresponding parameter input in the console) for a parameter called param_name. It corresponds to the following in the old docstring:

```python
@workflow
def my_workflow(
    param_name: ...,
    ...
):
    """
    ...

    Args:

        param_name:
            This is a parameter

            __metadata__:
                display_name: Parameter Name
                _tmp:
                    hidden: False

        ...
    """
```

Finally, when all of the parameters have been added, pass the LatchMetadata object to the workflow decorator:

```python
@workflow(metadata)
def my_workflow(
    ...
```

Using these constructs instead of purely docstring metadata will make development much less error-prone and make interface errors transparent to the user.

Note, however, that the new system does not provide Python representations of the short and long workflow descriptions at the start of the old-style docstring. Those portions remain in the docstring, where they document the workflow function itself.
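One mismatch that is easy to introduce by hand is a metadata.parameters key that does not match any workflow argument, or an argument that has no metadata entry. The check below is hypothetical and not part of the SDK: `check_metadata_parameters` compares the dictionary keys with the workflow signature, plain dicts stand in for LatchParameter objects, and `my_workflow` is a stand-in function.

```python
import inspect
from typing import Any, Callable, Dict, List, Tuple


def check_metadata_parameters(
    wf: Callable[..., Any], parameters: Dict[str, Any]
) -> Tuple[List[str], List[str]]:
    """Hypothetical check returning (unknown keys, arguments without metadata)."""
    arg_names = set(inspect.signature(wf).parameters)
    unknown = sorted(set(parameters) - arg_names)
    undocumented = sorted(arg_names - set(parameters))
    return unknown, undocumented


def my_workflow(param_name: str, sample_count: int) -> None:
    """Stand-in for a workflow function."""


parameters = {
    "param_name": {"display_name": "Parameter Name"},
    "param_nmae": {"display_name": "Typo"},  # misspelled key
}
print(check_metadata_parameters(my_workflow, parameters))
# (['param_nmae'], ['sample_count'])
```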

What's Changed

- docs: Fix conditionals example in documentation by @jvfe in #89

- updated README with two examples of latch workflows by @JLSteenwyk in #87

- pin lytekit + lytekitplugin by @kennyworkman in #85

- Rohankan/task execution messages by @rohankan in #90

- feat: initial latch metadata draft + file/directory bug fix by @ayushkamat in #94

New Contributors

- @jvfe made their first contribution in #89

- @JLSteenwyk made their first contribution in #87

- @rohankan made their first contribution in #90

Full Changelog: v1.3.2...v1.4.0

v1.3.2

Bump lytekit dependencies