-

-

-

- +

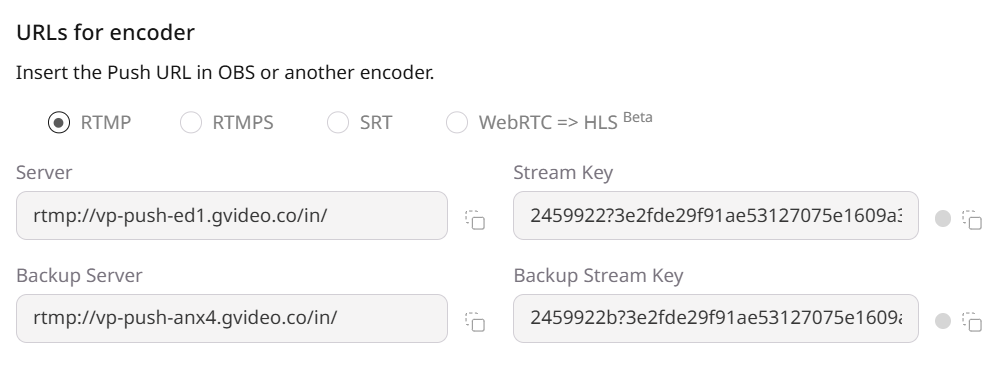

+Concatenate them to form the full RTMP URL for the stream:

+

+ rtmp://vp-push-ix1.gvideo.co/in/400448?cdf2a7ccf990e464c2b…

+

+3\. Open the command line interface (CLI) on your device and run the following command:

+

+ ffmpeg -f {input format params} -f flv {RTMP URL}
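As a rough illustration of the concatenation step above, the shell sketch below joins the two example values into a single URL. The variable names `SERVER_URL`, `STREAM_KEY`, and `RTMP_URL` are hypothetical, and the stream key stays truncated exactly as in the example, so substitute the full values from your own **Live stream settings** page.

```shell
# Hypothetical variable names; the values are copied from the example above.
# The stream key is truncated in the example: replace it with your full key.
SERVER_URL='rtmp://vp-push-ix1.gvideo.co/in/'
STREAM_KEY='400448?cdf2a7ccf990e464c2b…'

# Join the server URL and the stream key without any separator.
RTMP_URL="${SERVER_URL}${STREAM_KEY}"

# Keep the URL quoted when passing it to ffmpeg so the shell
# does not treat "?" as a glob character.
printf '%s\n' "$RTMP_URL"
```

Keeping the URL in a quoted variable also makes it easier to reuse the same value in the longer command shown later.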

+

+## Configure the stream parameters for optimal performance

+

+To ensure optimal streaming performance, we recommend configuring the stream parameters you will send to our server. You can adjust these settings via the CLI parameters of FFmpeg.

+

+Example of a command line for streaming via FFmpeg with the recommended parameters:

+

+ ffmpeg -f {input format params} \

+ -c:v libx264 -preset veryfast -b:v 2000000 \

+ -profile:v baseline -vf format=yuv420p \

+ -crf 23 -g 60 \

+ -b:a 128k -ar 44100 -ac 2 \

+ -f flv {RTMP URL}

+

+### Output parameters

+

+- **Video Bitrate:** To stream at 720p resolution, set the bitrate to 2000 Kbps (`-b:v 2000000`). If you’re broadcasting at 1080p, set the bitrate to 4000 Kbps (`-b:v 4000000`).

+- **Audio Bitrate:** 128 Kbps (`-b:a 128k`).

+- **Encoder:** Software (`-c:v libx264`), or any other H.264 codec.

+- **Rate control:** CRF (`-crf 23`).

+- **Keyframe Interval:** 2s (`-g 60` at 30 FPS, because `-g` is counted in frames).

+- **CPU Usage Preset:** veryfast (`-preset veryfast`).

+- **Profile:** baseline (`-profile:v baseline -vf format=yuv420p`)
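The relationship between these values can be sketched in a few lines of shell. This is only an illustration under stated assumptions: the variable names (`HEIGHT`, `FPS`, `KEYFRAME_SECONDS`, `GOP`, `VIDEO_BITRATE`) are hypothetical, and only the resulting flag values come from the list above.

```shell
# Hypothetical helper variables; only the resulting flag values
# are taken from the parameter list above.
HEIGHT=720            # output height in pixels (720 or 1080)
FPS=30                # output frame rate
KEYFRAME_SECONDS=2    # recommended keyframe interval in seconds

# -g is counted in frames, so multiply the interval by the frame rate.
GOP=$((FPS * KEYFRAME_SECONDS))

# Pick the recommended video bitrate (bits per second) for the resolution.
case "$HEIGHT" in
  720)  VIDEO_BITRATE=2000000 ;;
  1080) VIDEO_BITRATE=4000000 ;;
  *)    echo "no recommendation for ${HEIGHT}p in this guide" >&2
        VIDEO_BITRATE='' ;;
esac

# Print the flags that would go into the ffmpeg command.
echo "-b:v ${VIDEO_BITRATE} -g ${GOP}"
```

If you stream at a different frame rate, recompute `GOP` so that the keyframe interval stays at 2 seconds.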

+

+### Audio parameters

+

+- **Sample Rate**: 44.1 kHz (`-ar 44100`) or 48 kHz (`-ar 48000`).

+- Use **Stereo** for the best sound quality (`-ac 2`).

+

+### Video parameters

+

+If you need to reduce the original resolution (downscale), follow the instructions in this section. If no resolution change is required, you can skip this step.

+

+- **Output (Scaled) Resolution:** 1280×720

+- **Downscale Filter:** Bicubic

+- **Common FPS Values:** 30
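The list above names the target values but not the corresponding FFmpeg flags. One possible mapping, offered as an assumption rather than a confirmed recommendation, is FFmpeg's `scale` filter with `flags=bicubic` plus the `-r` output option. Because a second `-vf` would typically override the first, the sketch chains the scaling step with the `format=yuv420p` filter from the example command. The variable names are hypothetical.

```shell
# Hypothetical variable names; the values come from the list above.
WIDTH=1280
HEIGHT=720
FPS=30

# Chain scaling and pixel format conversion in one filter graph,
# since the example command already uses -vf for format=yuv420p.
VF="scale=${WIDTH}:${HEIGHT}:flags=bicubic,format=yuv420p"

# Print the flags that would replace "-vf format=yuv420p" in the command.
echo "-vf ${VF} -r ${FPS}"
```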

diff --git a/documentation/streaming-platform/live-streaming/broadcasting-software/larix.md b/documentation/streaming-platform/live-streaming/broadcasting-software/larix.md

new file mode 100644

index 000000000..36a3c7f7a

--- /dev/null

+++ b/documentation/streaming-platform/live-streaming/broadcasting-software/larix.md

@@ -0,0 +1,69 @@

+---

+title: larix

+displayName: Larix (Android/iOS)

+published: true

+order: 20

+pageTitle: Live Stream Setup with Larix | Gcore

+pageDescription: A step-by-step guide to pushing live streams via Larix.

+---

+

+# Larix

+

+Larix is a free encoder for video recording, screencasting, and live streaming. It’s suitable for video game streaming, blogging, educational content, and more.

+

+Larix links your mobile device (e.g., a smartphone or a tablet) to different streaming platforms (e.g., Gcore Video Streaming, YouTube, Twitch, etc.). It takes an image captured by a camera, converts it into a video stream, and then sends it to the streaming platform.

+

+## Setup

+

+1\. Install Larix on your mobile device. You can find the download instructions on the official website.

+

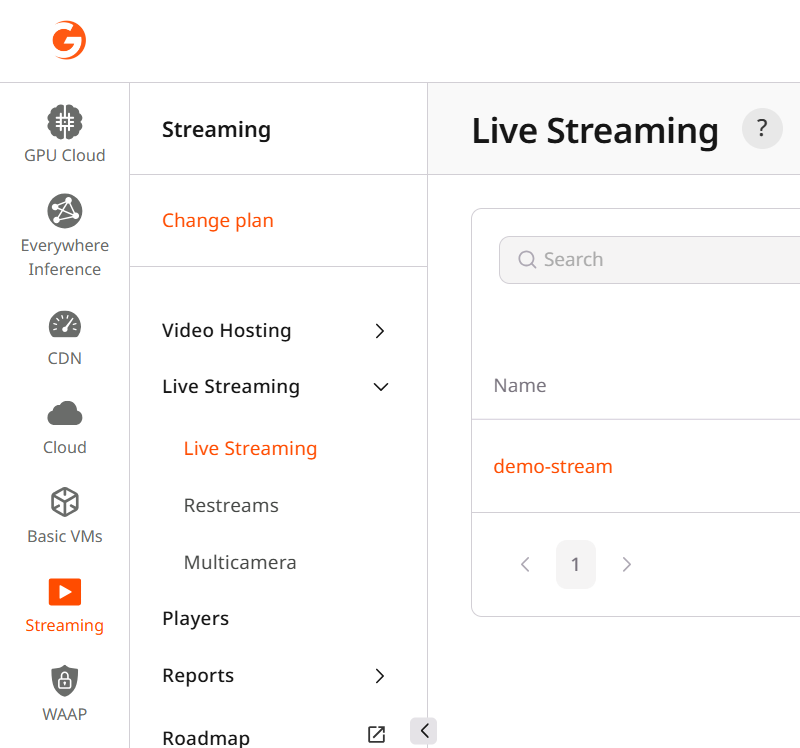

+2\. To get the server URL and stream key, go to the Streaming list, open the **Live stream settings** you need, and copy the relevant value from the **URLs for the encoder** section.

+

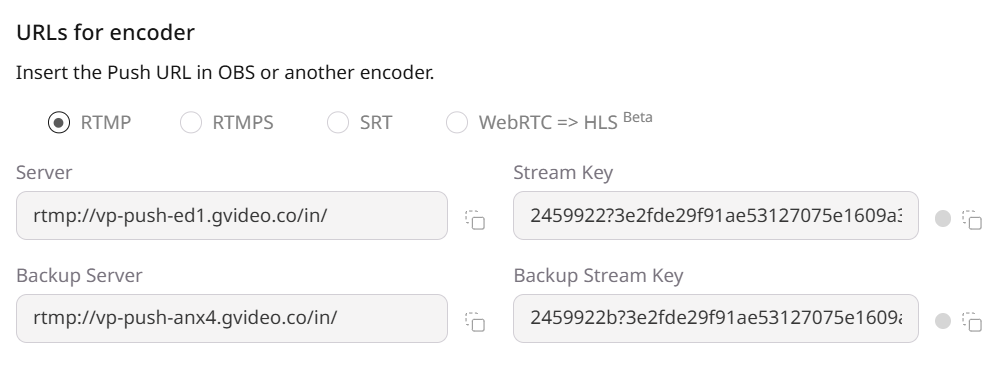

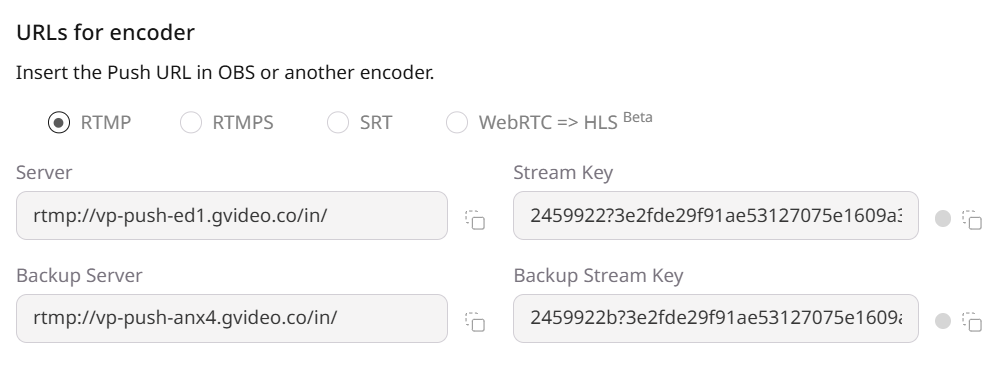

+For example, if you see these values on the **Live stream settings** page:

+

+

+

+Concatenate them to form the full RTMP URL for the stream:

+

+ rtmp://vp-push-ix1.gvideo.co/in/400448?cdf2a7ccf990e464c2b…

+

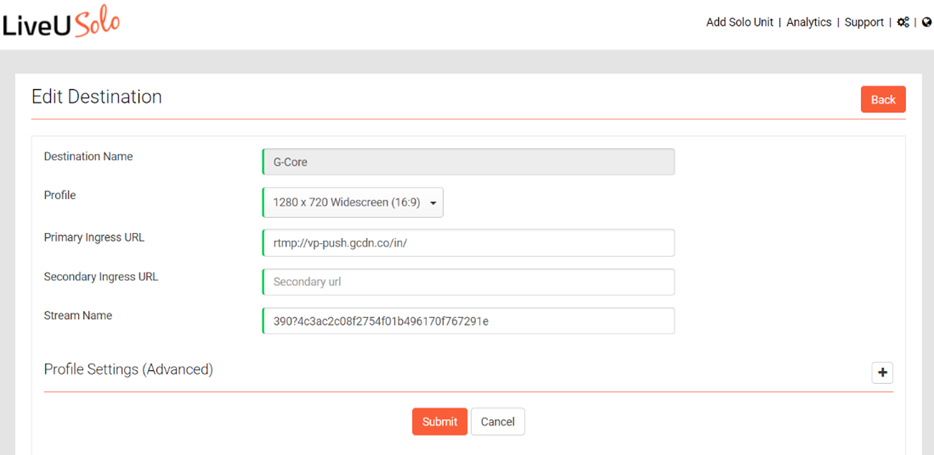

+3\. Open Larix Grove, where you can create the configuration for the Larix app that you can share via QR code.

+4\. In Larix Grove, scroll down to the **Connection** section.

+5\. Enter the RTMP URL and a name for your connection.

+6\. Click the QR-Code button to generate a QR code. You can scan this code with the Larix app on your mobile device to automatically configure the connection.

+7\. Open the Larix app on your mobile device and tap the gear icon to open the settings.

+8\. Tap **Larix Grove** and then tap **Scan Grove QR code**.

+9\. Scan the QR code you generated in Larix Grove. The app will automatically configure the connection.

+10\. Go back to the main screen of the Larix app and tap the big white button to start streaming.

+

+## Configure the stream parameters for optimal performance

+

+To ensure optimal streaming performance, we recommend configuring the stream parameters you will send to our server.

+

+You can adjust these settings with Larix Grove, where the configuration together with the connection URL is generated as a QR code. This allows you to easily share the configuration with team members.

+

+After you change the settings, click the **QR-Code** button to generate a new QR code for sharing.

+

+### Camera parameters

+

+If you need to reduce the original resolution (downscale), use the values in this section. If no resolution change is required, you can skip this step.

+

+If you increase the frame rate to 60 FPS, also double the bitrate to maintain stream quality.

+

+- **Resolution:** 1280×720

+- **Frame rate:** 30
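The doubling rule above is simple arithmetic, sketched here in shell for clarity. The variable names are hypothetical; the base value is the 720p bitrate recommended in this guide, and the result is the number you would enter in Larix Grove.

```shell
# Hypothetical variable names; base value is the 720p recommendation.
BASE_BITRATE=2000000   # bits per second at 30 FPS
FPS=60                 # desired frame rate

# Double the bitrate when streaming at 60 FPS, otherwise keep the base value.
if [ "$FPS" -ge 60 ]; then
  VIDEO_BITRATE=$((BASE_BITRATE * 2))
else
  VIDEO_BITRATE=$BASE_BITRATE
fi

echo "$VIDEO_BITRATE"
```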

+

+### Video encoder parameters

+

+- **Video Bitrate:** 2000000 (2000 Kbps) for 720p resolution or 4000000 (4000 Kbps) for 1080p resolution.

+- **Keyframe Interval:** 60 frames (i.e., 2 seconds at 30 FPS)

+

+### Audio encoder parameters

+

+- **Audio Bitrate:** 128000

+- **Sample Rate**: 44100 or 48000

+- **Channels**: 2

diff --git a/documentation/streaming-platform/live-streaming/broadcasting-software/metadata.md b/documentation/streaming-platform/live-streaming/broadcasting-software/metadata.md

new file mode 100644

index 000000000..e69de29bb

diff --git a/documentation/streaming-platform/live-streaming/push-live-streams-software/push-live-streams-via-obs.md b/documentation/streaming-platform/live-streaming/broadcasting-software/obs.md

similarity index 79%

rename from documentation/streaming-platform/live-streaming/push-live-streams-software/push-live-streams-via-obs.md

rename to documentation/streaming-platform/live-streaming/broadcasting-software/obs.md

index 3cdeaa4c6..a41cb9476 100644

--- a/documentation/streaming-platform/live-streaming/push-live-streams-software/push-live-streams-via-obs.md

+++ b/documentation/streaming-platform/live-streaming/broadcasting-software/obs.md

@@ -1,27 +1,19 @@

---

-title: push-live-streams-via-obs

-displayName: OBS (Open Broadcaster Software)

+title: obs

+displayName: OBS

published: true

-order: 10

-toc:

- --1--What is an OBS?: "what-is-an-obs"

- --1--Configure: "configure-the-obs-encoder-for-gcore-streaming"

- --1--Manage the stream: "manage-the-stream-parameters"

- --2--Output: "output-parameters"

- --2--Audio: "audio-parameters"

- --2--Video: "video-parameters"

+order: 30

pageTitle: Live Stream Setup with OBS | Gcore

pageDescription: A step-by-step guide to pushing live streams via Open Broadcaster Software (OBS).

---

-# Push live streams via OBS

-## What is an OBS?

+# Open Broadcaster Software

Open Broadcaster Software (OBS) is a free and open-source encoder for video recording, screencasting, and live streaming. It’s suitable for video game streaming, blogging, educational content, and more.

OBS links your device (a laptop or a PC) and different streaming platforms (Gcore Video Streaming, YouTube, Twitch, etc.). It takes an image captured by a camera, converts it into a video stream, and then sends it to the streaming platform.

-## Configure the OBS encoder for Gcore Streaming

+## Setup

1\. Download Open Broadcaster Software (OBS) from the official website and install it.

@@ -39,8 +31,8 @@ For example, if you see these values on the Live stream settings page:

paste them to the OBS Settings as follows:

-- *rtmp://vp-push-ix1.gvideo.co/in/* is the Server;

-- *400448?cdf2a7ccf990e464c2b…* is the Stream Key.

+- _rtmp://vp-push-ix1.gvideo.co/in/_ is the Server;

+- _400448?cdf2a7ccf990e464c2b…_ is the Stream Key.

5\. Click the **Apply** button to save the new configuration.

@@ -54,7 +46,7 @@ paste them to the OBS Settings as follows:

That’s it. The stream from OBS will be broadcast to your website.

-## Manage the stream parameters

+## Configure the stream parameters for optimal performance

To ensure optimal streaming performance, we recommend configuring the stream parameters you will send to our server. You can adjust these settings in the Output, Audio, and Video tabs within OBS.

@@ -64,9 +56,9 @@ To ensure optimal streaming performance, we recommend configuring the stream par

2\. Set the parameters as follows:

-- **Video Bitrate:** The resolution of your stream determines the required bitrate: The higher the resolution, the higher the bitrate. To stream at 720p resolution, set the bitrate to 2000Kbps. If you’re broadcasting at 1080p, set the bitrate to 4000Kbps.

-- **Audio Bitrate:** 128.

-- **Encoder:** Software (x264), or any other H264 codec.

+- **Video Bitrate:** The resolution of your stream determines the required bitrate: The higher the resolution, the higher the bitrate. To stream at 720p resolution, set the bitrate to 2000Kbps. If you’re broadcasting at 1080p, set the bitrate to 4000Kbps.

+- **Audio Bitrate:** 128.

+- **Encoder:** Software (x264), or any other H264 codec.

@@ -74,10 +66,10 @@ To ensure optimal streaming performance, we recommend configuring the stream par

4\. Set the parameters as follows:

-- **Rate control:** CRF (the default value is 23)

-- **Keyframe Interval (0=auto):** 2s

-- **CPU Usage Preset:** veryfast

-- **Profile:** baseline

+- **Rate control:** CRF (the default value is 23)

+- **Keyframe Interval (0=auto):** 2s

+- **CPU Usage Preset:** veryfast

+- **Profile:** baseline

5\. Click **Apply** to save the configuration.

@@ -101,9 +93,9 @@ If you need to reduce the original resolution (downscale), follow the instructio

2\. Set the following parameters:

-- **Output (Scaled) Resolution:** 1280×720

-- **Downscale Filter:** Bicubic

-- **Common FPS Values:** 30

+- **Output (Scaled) Resolution:** 1280×720

+- **Downscale Filter:** Bicubic

+- **Common FPS Values:** 30

3\. Click **Apply**.

diff --git a/documentation/streaming-platform/live-streaming/create-a-live-stream.md b/documentation/streaming-platform/live-streaming/create-a-live-stream.md

index 7961f5233..2c740925c 100644

--- a/documentation/streaming-platform/live-streaming/create-a-live-stream.md

+++ b/documentation/streaming-platform/live-streaming/create-a-live-stream.md

@@ -4,24 +4,22 @@ displayName: Create a live stream

published: true

order: 10

toc:

- --1--1. Initiate process: "initiate-the-process"

- --1--2. Set type and features: "step-2-set-the-stream-type-and-additional-features"

- --1--3. Configure push, pull, or WebRTC to HLSl: "step-3-configure-your-stream-for-push-pull-or-webrtc-to-hls"

- --2--Push ingest type: "push-ingest-type"

- --2--Pull ingest type : "pull-ingest-type"

- --2--WebRTC to HLS ingest type: "webrtc-to-hls-ingest-type"

- --1--4. Start stream: "step-4-start-the-stream"

- --1--5. Embed to app: "step-5-embed-the-stream-to-your-app"

+ --1--1. Initiate process: 'initiate-the-process'

+ --1--2. Set type and features: 'step-2-set-the-stream-type-and-additional-features'

+ --1--3. Configure ingest type and additional features: 'step-3-configure-ingest-type-and-additional-features'

+ --1--4. Start stream: 'step-4-start-the-stream'

+ --1--5. Embed to app: 'step-5-embed-the-stream-to-your-app'

pageTitle: Guide to Creating Live Streams | Gcore

pageDescription: A step-by-step tutorial on how to create live streams using Gcore's interface. Learn about stream types, encoder settings, and embedding options.

---

+

# Create a live stream

## Step 1. Initiate the process

-1\. In the Gcore Customer Portal, navigate to Streaming > **Live Streaming**.

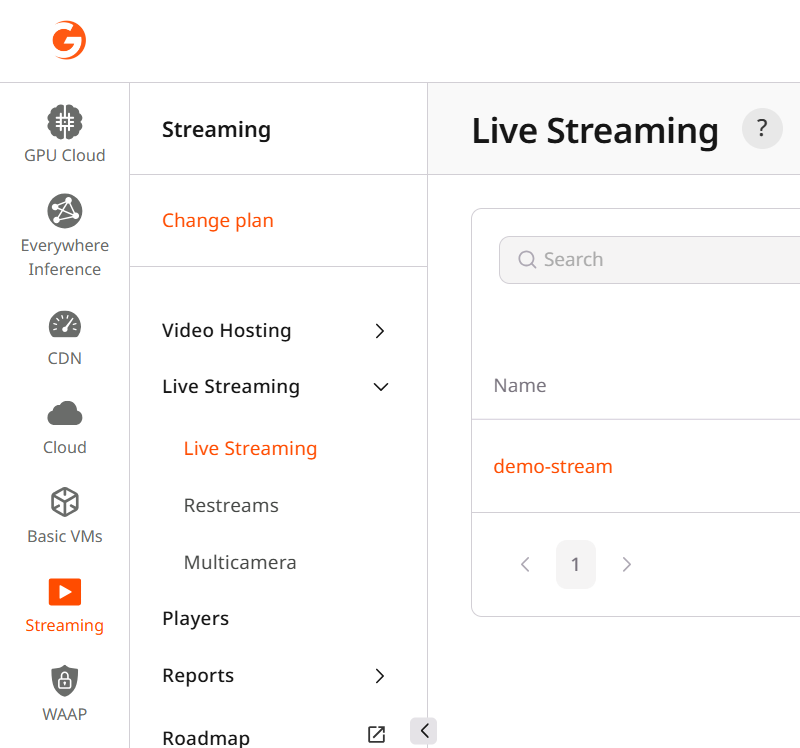

+1\. In the **Gcore Customer Portal**, navigate to **Streaming** > **Live Streaming**.

-2\. Click **Create Live stream**.

+2\. Click the **Create Live stream** button on the top right.

@@ -31,13 +29,13 @@ If the button is non-responsive, you have exceeded your live stream limit. To cr

-2\. Enter the name of your live stream in the window that appears and click **Create**.

+2\. Enter the name of your live stream in the window that appears and click the **Create** button.

-A new page will appear. Perform the remaining steps there.

+A new page will appear. Perform the remaining steps there.

-## Step 2. Set the stream type and additional features

+## Step 2. Set the ingest type and additional features

@@ -49,92 +47,38 @@ By default, we offer live streams with low latency (a 4–5 second delay.) Low l

-2\. (Optional) Review the live stream name and update it if needed.

-

-3\. Enable additional features If you activated them previously:

-

-* Record for live stream recording. It will be active when you start streaming. Remember to enable the toggle if you require a record of your stream.

-* DVR for an improved user experience. When the DVR feature is enabled, your viewers can pause and rewind the broadcast.

-

-4\. Select the relevant stream type: **Push**, **Pull**, or **WebRTC => HLS**.

-

-* Choose **Push** if you don't use your own media server. Establish the URL of our server and the unique stream key in your encoder (e.g. OBS, Streamlabs, vMix, or LiveU Solo). You can use protocols RTMP, RTMPS, and SRT too. The live stream will operate on our server, will be converted to MPEG-DASH and HLS protocols, and will be distributed to end users via our CDN.

-

-* Choose **Pull** if you have a streaming media server. The live stream will operate on your server. Our server will convert it from the RTMP, RTMPS, SRT, or other protocols to MPEG-DASH and HLS protocols. Then, our CDN will distribute the original live stream in the new format to end users.

-

-* Choose **WebRTC => HLS** if you want to convert your live video stream from WebRTC to HLS (HTTP Live Streaming) and DASH (Dynamic Adaptive Streaming over HTTP) formats.

-

-## Step 3. Configure your stream for push, pull, or WebRTC to HLS

-

-### Push ingest type

-

-1\. Select the protocol for your stream: **RTMP**, **RTMPS**, or **SRT**. The main difference between these protocols is their security levels and ability to handle packet loss.

-

-- RTMP is the standard open-source protocol for live broadcasting over the internet. It supports low latency.

-- RTMPS is a variation of RTMP that incorporates SSL usage.

-- SRT is a protocol designed to transmit data reliably with protection against packet loss.

-

-

-2\. Copy the relevant data to insert into your encoder.

-

-

-

+3\. Enable additional features:

+- RTMP/RTMPS

+- SRT

+- WebRTC to HLS

## Step 4. Start the stream

-Start a live stream on your media server or encoder. You will see a streaming preview on the Gcore Live Stream Settings page if everything is configured correctly.

+Start a live stream on your media server or encoder. You will see a streaming preview on the **Live Stream Settings** page if everything is configured correctly.

## Step 5. Embed the stream to your app

Embed the created live stream into your web app by one of the following methods:

-- Copy the iframe code to embed the live stream within the Gcore built-in player.

-- Copy the export link in a suitable protocol and paste it into your player. Use the **LL-DASH** link if your live stream will be viewed from any device except iOS. Use **LL HLS** for iOS viewing.

+- Copy the iframe code to embed the live stream within the Gcore built-in player.

+- Copy the export link in a suitable protocol and paste it into your player. Use the **LL-DASH** link if your live stream will be viewed from any device except iOS. Use **LL HLS** for iOS viewing.

@@ -142,6 +86,6 @@ That’s it. Your viewers can see the live stream.

-For details on how to get the streams via API, check our API documentation.

+For details on how to get the streams via API, check our API documentation.

diff --git a/documentation/streaming-platform/live-streaming/protocols/metadata.md b/documentation/streaming-platform/live-streaming/protocols/metadata.md

new file mode 100644

index 000000000..9f91844ee

--- /dev/null

+++ b/documentation/streaming-platform/live-streaming/protocols/metadata.md

@@ -0,0 +1,6 @@

+---

+title: metadata

+displayName: Protocols

+published: true

+order: 15

+---

diff --git a/documentation/streaming-platform/live-streaming/protocols/rtmp.md b/documentation/streaming-platform/live-streaming/protocols/rtmp.md

new file mode 100644

index 000000000..4ef7827d2

--- /dev/null

+++ b/documentation/streaming-platform/live-streaming/protocols/rtmp.md

@@ -0,0 +1,120 @@

+---

+title: rtmp-rtmps

+displayName: RTMP

+published: true

+order: 10

+pageTitle: Guide to RTMP ingest | Gcore

+pageDescription: A step-by-step tutorial on how to create and stop live streams using Gcore's interface or customer's environment.

+---

+

+# The Real Time Messaging Protocol

+

+The Real Time Messaging Protocol (RTMP) is the most common way to stream to video streaming platforms. Gcore Live Streaming supports both RTMP and RTMPS.

+

+

+ +

+2\. Click on the stream you want to push to. This will open the **Live Stream Settings**.

+

+

+

+

+ +

+3\. Ensure that the **Ingest type** is set to **Push**.

+4\. Ensure that the protocol is set to **RTMP** or **RTMPS** in the **URLs for encoder** section.

+5\. Copy the **Server** URL and **Stream Key** from the **URLs for encoder** section.

+

+

+

+

+ +

+#### Via the API

+

+You can also obtain the URL and stream key via the Gcore API. The endpoint returns the complete URLs for the default and backup ingest points, as well as the stream key.

+

+Example of the API request:

+

+```http

+GET /streaming/streams/{stream_id}

+```

+

+Example of the API response:

+

+```json

+{

+ "push_url": "rtmp://vp-push-anx2.domain.com/in/123?08cd54f0",

+ "backup_push_url": "rtmp://vp-push-ed1.domain.com/in/123b?08cd54f0",

+ ...

+}

+```

+

+Read more in the API documentation.
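
As a rough illustration of working with a response like the one above, the following Python sketch splits a push URL into the two values an encoder typically asks for: a server address and a stream key. The split point (everything after `/in/`) is an assumption based only on the sample URLs in this guide, and `split_push_url` is a hypothetical helper, not part of any Gcore SDK.

```python
from __future__ import annotations

from urllib.parse import urlsplit


def split_push_url(push_url: str) -> tuple[str, str]:
    """Split a push URL into (server, stream_key).

    Assumption: the server part ends with the "/in/" path prefix seen in
    the sample URLs, and everything after it is the stream key.
    """
    parts = urlsplit(push_url)
    prefix = "/in/"
    if not parts.path.startswith(prefix):
        raise ValueError(f"unexpected path in push URL: {parts.path!r}")
    server = f"{parts.scheme}://{parts.netloc}{prefix}"
    key = parts.path[len(prefix):]
    if parts.query:
        key = f"{key}?{parts.query}"
    return server, key


# Sample values copied from the example response (elided fields are left out).
response = {
    "push_url": "rtmp://vp-push-anx2.domain.com/in/123?08cd54f0",
    "backup_push_url": "rtmp://vp-push-ed1.domain.com/in/123b?08cd54f0",
}

server, key = split_push_url(response["push_url"])
backup_server, backup_key = split_push_url(response["backup_push_url"])
```

Concatenating `server` and `key` gives back the original push URL, so either form can be used depending on what the encoder expects.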

+

+## Pull streams

+

+Gcore Video Streaming can pull video data from your external server.

+

+Main rules of pulling:

+

+- The URL of the stream to pull from must be **publicly available** and **return a 200 status** for all requests.

+- You can specify **multiple media servers** (separated by spaces) in the **URL** input field. The total length of all URLs must not exceed 255 characters, and the listed servers are polled in round-robin order.

+- If a stream is closed (i.e., its connection is terminated) or carries no video data for 30 seconds, reconnection attempts are made at progressively longer intervals (10s, 30s, 60s, 5min, 10min).

+- The stream will be deactivated after 24 hours of inactivity.

+- If you need to set an allowlist for access to the stream, please contact support to get an up-to-date list of networks.
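
The rules above can be sketched in Python to check a URL list before saving it and to get a feel for the retry timing. This is only an illustration: `parse_pull_urls` and `retry_plan` are hypothetical helpers, the 255-character limit is assumed to apply to the whole field including the separating spaces, and the delay is assumed to stay at 10 minutes once the listed steps are used up. The platform's actual behavior may differ.

```python
from __future__ import annotations

from itertools import cycle

MAX_URL_FIELD_LENGTH = 255               # limit stated in the rules above
RETRY_DELAYS_S = [10, 30, 60, 300, 600]  # 10s, 30s, 60s, 5min, 10min


def parse_pull_urls(field: str) -> list[str]:
    """Validate the URL field and return the individual server URLs."""
    if len(field) > MAX_URL_FIELD_LENGTH:
        raise ValueError(
            f"URL field is {len(field)} characters; "
            f"the limit is {MAX_URL_FIELD_LENGTH}"
        )
    urls = field.split()
    if not urls:
        raise ValueError("at least one URL is required")
    return urls


def retry_plan(urls: list[str], attempts: int) -> list[tuple[int, str]]:
    """Pair each retry delay (in seconds) with the server tried next.

    Assumptions: plain round-robin rotation, and the last delay repeats
    after the listed steps are exhausted.
    """
    servers = cycle(urls)
    plan = []
    for attempt in range(attempts):
        delay = RETRY_DELAYS_S[min(attempt, len(RETRY_DELAYS_S) - 1)]
        plan.append((delay, next(servers)))
    return plan


urls = parse_pull_urls("rtmp://a.example.com/live rtmp://b.example.com/live")
plan = retry_plan(urls, attempts=6)
```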

+

+### Setting up a pull stream

+

+There are two ways to set up a pull stream: via the Gcore Customer Portal or via the API.

+

+#### Via the Gcore Customer Portal

+

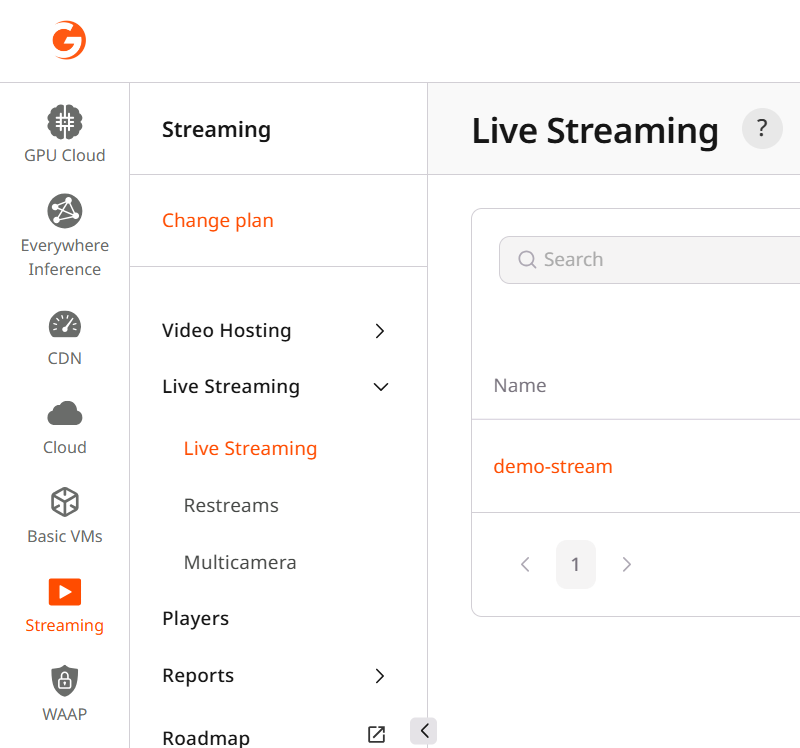

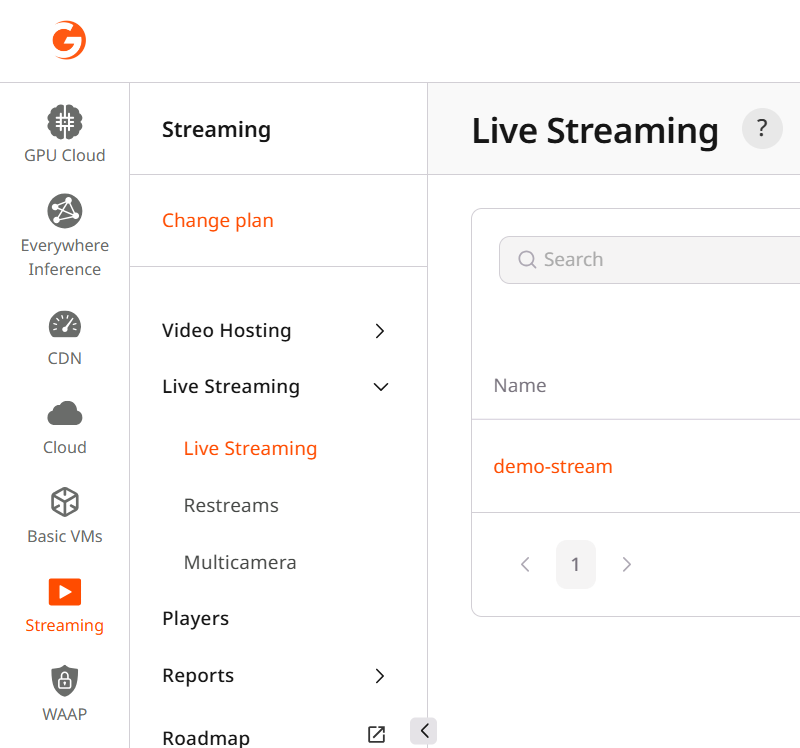

+1\. In the **Gcore Customer Portal**, navigate to **Streaming** > **Live Streaming**.

+

+

+

+

+ +

+2\. Click on the stream you want to pull from. This will open the **Live Stream Settings**.

+

+

+

+

+ +

+3\. Ensure that the **Ingest type** is set to **Pull**.

+4\. In the **URL** field, insert a link to the stream from your media server.

+5\. Click the **Save changes** button on the top right.

+

+#### Via the API

+

+You can also set up a pull stream via the Gcore API. The endpoint accepts the URL of the stream to pull from.

+

+Example of the API request:

+

+```http

+PATCH /streaming/streams/{stream_id}

+```

+

+```json

+{

+ "stream": {

+ "pull": true,

+ "uri": "rtmp://example.com/path/to/stream",

+ ...

+ }

+}

+```

+

+Read more in the API documentation.
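
For illustration, the request above could be assembled in Python with the standard library as follows. This is a sketch under stated assumptions: `API_BASE`, `API_TOKEN`, and the `Authorization` header format are placeholders (check the API documentation for the real host and authentication scheme), and `build_pull_request` is a hypothetical helper. The request is only built here, not sent.

```python
from __future__ import annotations

import json
from urllib.request import Request

API_BASE = "https://api.example.com"  # placeholder, not the real API host
API_TOKEN = "YOUR_API_TOKEN"          # placeholder credential


def build_pull_request(stream_id: int, source_url: str) -> Request:
    """Build (but do not send) a PATCH request that switches a stream to pull."""
    body = {"stream": {"pull": True, "uri": source_url}}
    return Request(
        url=f"{API_BASE}/streaming/streams/{stream_id}",
        data=json.dumps(body).encode("utf-8"),
        method="PATCH",
        headers={
            "Content-Type": "application/json",
            # Assumed header format; replace with the scheme your account uses.
            "Authorization": f"Bearer {API_TOKEN}",
        },
    )


request = build_pull_request(123, "rtmp://example.com/path/to/stream")
```

Passing `request` to `urllib.request.urlopen` would send it once real values are filled in.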

diff --git a/documentation/streaming-platform/live-streaming/protocols/srt.md b/documentation/streaming-platform/live-streaming/protocols/srt.md

new file mode 100644

index 000000000..2d1d398d2

--- /dev/null

+++ b/documentation/streaming-platform/live-streaming/protocols/srt.md

@@ -0,0 +1,114 @@

+---

+title: srt

+displayName: SRT

+published: true

+order: 20

+pageTitle: Guide to SRT ingest | Gcore

+pageDescription: A step-by-step tutorial on how to create and stop live streams using Gcore's interface or customer's environment.

+---

+

+# The Secure Reliable Transport Protocol

+

+Secure Reliable Transport (SRT) is an open-source streaming protocol that addresses some limitations of RTMP delivery. In contrast to RTMP/RTMPS, SRT is a UDP-based protocol that provides low-latency streaming over unpredictable networks. On Gcore Video Streaming, SRT is also required if you want to use the H.265/HEVC codec.

+

+## Push streams

+

+Gcore Video Streaming provides you with two endpoints for pushing a stream: a default one and a backup one. The default endpoint is the one closest to your location. The backup endpoint is in a different location and is used if the default one is unavailable.

+

+By default, Gcore will route your stream to free ingest points with the lowest latency. If you need to set a fixed ingest point or if you need to set the main and backup ingest points in the same region (i.e., to not send streams outside the EU or US), please contact our support team.

+

+### Obtain the server URLs

+

+There are two ways to obtain the SRT server URLs: via the Gcore Customer Portal or via the API.

+

+#### Via the Gcore Customer Portal

+

+1\. In the **Gcore Customer Portal**, navigate to **Streaming** > **Live Streaming**.

+

+

+

+

+ +

+2\. Click on the stream you want to push to. This will open the **Live Stream Settings**.

+

+

+

+

+ +

+3\. Ensure that the **Ingest type** is set to **Push**.

+4\. Ensure that the protocol is set to **SRT** in the **URLs for encoder** section.

+5\. Copy the server URL from the **Push URL SRT** field.

+

+

+

+

+ +

+#### Via the API

+

+You can also obtain the URL and stream key via the Gcore API. The endpoint returns the complete URLs for the default and backup ingest points, as well as the stream key.

+

+Example of the API request:

+

+```http

+GET /streaming/streams/{stream_id}

+```

+

+Example of the API response:

+

+```json

+{

+ "push_url": "srt://vp-push-anx2.domain.com/in/123?08cd54f0",

+ "backup_push_url": "srt://vp-push-ed1.domain.com/in/123b?08cd54f0",

+ ...

+}

+```

+

+Read more in the API documentation.
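
Since the response carries both a default and a backup URL, a client can prefer the default and fall back to the backup. The Python sketch below shows one possible way to do that. `choose_ingest_url` is a hypothetical helper, and the reachability check is passed in as a function because how to probe an SRT endpoint depends on your encoder and network; the lambda used here is only a stand-in.

```python
from __future__ import annotations

from typing import Callable


def choose_ingest_url(stream: dict, is_reachable: Callable[[str], bool]) -> str:
    """Return the default push URL, or the backup one if the default fails the check."""
    for field in ("push_url", "backup_push_url"):
        url = stream.get(field)
        if url and is_reachable(url):
            return url
    raise RuntimeError("neither the default nor the backup ingest point is reachable")


# Sample values copied from the example response (elided fields are left out).
stream = {
    "push_url": "srt://vp-push-anx2.domain.com/in/123?08cd54f0",
    "backup_push_url": "srt://vp-push-ed1.domain.com/in/123b?08cd54f0",
}

# Stand-in check that pretends only the backup host responds.
selected = choose_ingest_url(stream, is_reachable=lambda url: "ed1" in url)
```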

+

+## Pull streams

+

+Gcore Video Streaming can pull video data from your external server.

+

+Main rules of pulling:

+

+- The URL of the stream to pull from must be **publicly available** and **return a 200 status** for all requests.

+- You can specify **multiple media servers** (separated by spaces) in the **URL** input field. The total length of all URLs must not exceed 255 characters, and the listed servers are polled in round-robin order.

+- If a stream is closed (i.e., its connection is terminated) or carries no video data for 30 seconds, reconnection attempts are made at progressively longer intervals (10s, 30s, 60s, 5min, 10min).

+- The stream will be deactivated after 24 hours of inactivity.

+- If you need to set an allowlist for access to the stream, please contact support to get an up-to-date list of networks.

+

+### Setting up a pull stream

+

+There are two ways to set up a pull stream: via the Gcore Customer Portal or via the API.

+

+#### Via the Gcore Customer Portal

+

+1\. In the **Gcore Customer Portal**, navigate to **Streaming** > **Live Streaming**.

+

+

+

+

+ +

+2\. Click on the stream you want to pull from. This will open the **Live Stream Settings**.

+

+

+

+

+ +

+3\. Ensure that the **Ingest type** is set to **Pull**.

+4\. In the **URL** field, insert a link to the stream from your media server.

+5\. Click the **Save changes** button on the top right.

+

+

+

+

+ +

+#### Via the API

+

+You can also set up a pull stream via the Gcore API. The endpoint accepts the URL of the stream to pull from.

+

+Example of the API request:

+

+```http

+PATCH /streaming/streams/{stream_id}

+```

+

+```json

+{

+ "stream": {

+ "pull": true,

+ "uri": "srt://example.com/path/to/stream",

+ ...

+ }

+}

+```

+

+Read more in the API documentation.

diff --git a/documentation/streaming-platform/live-streaming/webrtc-to-hls-transcoding.md b/documentation/streaming-platform/live-streaming/protocols/webrtc-to-hls-transcoding.md

similarity index 75%

rename from documentation/streaming-platform/live-streaming/webrtc-to-hls-transcoding.md

rename to documentation/streaming-platform/live-streaming/protocols/webrtc-to-hls-transcoding.md

index d04e519de..138cac013 100644

--- a/documentation/streaming-platform/live-streaming/webrtc-to-hls-transcoding.md

+++ b/documentation/streaming-platform/live-streaming/protocols/webrtc-to-hls-transcoding.md

@@ -1,81 +1,83 @@

---

title: webrtc-to-hls-transcoding

-displayName: WebRTC ingest and transcoding to HLS/DASH

+displayName: WebRTC to HLS/DASH

published: true

order: 15

toc:

- --1--Benefits of WebRTC and HLS/DASH conversion: "advantages-of-webrtc-andconversion-to-hls-dash"

- --1--How it works: "how-it-works"

- --1--WebRTC stream encoding parameters: "webrtc-stream-encoding-parameters"

- --2--Supported WHIP clients: "supported-whip-clients"

- --2--LL-HLS and LL-DASH outputs: "ll-hls-and-ll-dash-outputs"

- --1--Convert WebRTC in the Customer Portal: "convert-webrtc-to-hls-in-the-customer-portal"

- --1--Convert WebRTC in your environment: "convert-webrtc-to-hls-in-your-environment"

- --2--With the WebRTC WHIP library: "start-a-stream-with-the-gcore-webrtc-whip-library"

- --2--With your backend or frontend: "start-a-stream-with-your-own-backend-or-frontend"

- --2--Play HLS or DASH: "play-hls-or-dash"

- --2--Deactivate a stream: "deactivate-a-finished-stream"

- --2--Demo projects: "demo-projects-of-streaming-with-frontend-and-backend"

- --1--Troubleshooting: "troubleshooting"

- --2--Error handling: "error-handling"

- --2--Sudden disconnection of camera or microphone: "sudden-disconnection-of-camera-or-microphone"

- --2--Debugging with Chrome WebRTC internals: "debugging-with-chrome-webrtc-internals-tool"

- --2--Network troubleshooting: "network-troubleshooting"

+ --1--Benefits of WebRTC and HLS/DASH conversion: 'advantages-of-webrtc-andconversion-to-hls-dash'

+ --1--How it works: 'how-it-works'

+ --1--WebRTC stream encoding parameters: 'webrtc-stream-encoding-parameters'

+ --2--Supported WHIP clients: 'supported-whip-clients'

+ --2--LL-HLS and LL-DASH outputs: 'll-hls-and-ll-dash-outputs'

+ --1--Convert WebRTC in the Customer Portal: 'convert-webrtc-to-hls-in-the-customer-portal'

+ --1--Convert WebRTC in your environment: 'convert-webrtc-to-hls-in-your-environment'

+ --2--With the WebRTC WHIP library: 'start-a-stream-with-the-gcore-webrtc-whip-library'

+ --2--With your backend or frontend: 'start-a-stream-with-your-own-backend-or-frontend'

+ --2--Play HLS or DASH: 'play-hls-or-dash'

+ --2--Deactivate a stream: 'deactivate-a-finished-stream'

+ --2--Demo projects: 'demo-projects-of-streaming-with-frontend-and-backend'

+ --1--Troubleshooting: 'troubleshooting'

+ --2--Error handling: 'error-handling'

+ --2--Sudden disconnection of camera or microphone: 'sudden-disconnection-of-camera-or-microphone'

+ --2--Debugging with Chrome WebRTC internals: 'debugging-with-chrome-webrtc-internals-tool'

+ --2--Network troubleshooting: 'network-troubleshooting'

pageTitle: Guide to WebRTC ingest and transcoding to HLS/DASH | Gcore

-pageDescription: A step-by-step tutorial on how to create and stop live streams using Gcore's interface or customer's environment.

+pageDescription: A step-by-step tutorial on how to create and stop live streams using Gcore's interface or customer's environment.

---

+

# WebRTC ingest and transcoding to HLS/DASH

-Streaming videos using HLS and MPEG-DASH protocols is a simple and cost-effective way to show your video to large audiences. However, this requires the original streams to be in a certain format that browsers do not support natively.

+Streaming videos using HLS and MPEG-DASH protocols is a simple and cost-effective way to show your video to large audiences. However, this requires the original streams to be in a certain format that browsers do not support natively.

-At the same time, WebRTC protocol works in any browser, but it’s not as flexible when streaming to large audiences.

+At the same time, WebRTC protocol works in any browser, but it’s not as flexible when streaming to large audiences.

-Gcore Video Streaming supports both WebRTC HTTP Ingest Protocol (WHIP) and WebRTC to HLS/DASH converter, giving you the advantages of these protocols.

+Gcore Video Streaming supports both WebRTC HTTP Ingest Protocol (WHIP) and WebRTC to HLS/DASH converter, giving you the advantages of these protocols.

+

## Advantages of WebRTC and conversion to HLS/DASH

-WebRTC ingest for streaming offers two key advantages over traditional RTMP and SRT protocols:

+WebRTC ingest for streaming offers two key advantages over traditional RTMP and SRT protocols:

-1\. It runs directly in the presenter's browser, so no additional software is needed.

+1\. It runs directly in the presenter's browser, so no additional software is needed.

-2\. WebRTC can reduce stream latency.

+2\. WebRTC can reduce stream latency.

-By using WebRTC WHIP for ingest, you can convert WebRTC to HLS/DASH playback, which provides the following benefits:

+By using WebRTC WHIP for ingest, you can convert WebRTC to HLS/DASH playback, which provides the following benefits:

-* Fast ingest via WebRTC from a browser.

-* Optimal stream distribution using HLS/DASH with adaptive bitrate streaming (ABR) through the CDN.

+- Fast ingest via WebRTC from a browser.

+- Optimal stream distribution using HLS/DASH with adaptive bitrate streaming (ABR) through the CDN.

-## How it works

+## How it works

+

+We use a dedicated WebRTC WHIP server to manage WebRTC ingest. This server handles both signaling and video data reception. Such a setup allows you to configure WebRTC on demand and continue to use all system capabilities to set up transcoding and delivery via CDN.

-We use a dedicated WebRTC WHIP server to manage WebRTC ingest. This server handles both signaling and video data reception. Such a setup allows you to configure WebRTC on demand and continue to use all system capabilities to set up transcoding and delivery via CDN.

+The RTC WHIP server organizes signaling and receives video data. Signaling refers to the communication between WebRTC endpoints that is necessary to initiate and maintain a session. WHIP is an open specification for a simple signaling protocol that starts WebRTC sessions in an outgoing direction, such as streaming from your device.

-The RTC WHIP server organizes signaling and receives video data. Signaling refers to the communication between WebRTC endpoints that are necessary to initiate and maintain a session. WHIP is an open specification for a simple signaling protocol that starts WebRTC sessions in an outgoing direction, such as streaming from your device.

+We use local servers in each region to ensure a minimal route from a user-presenter to the server.

-We use local servers in each region to ensure a minimal route from a user-presenter to the server.

+### WebRTC stream encoding parameters

-### WebRTC stream encoding parameters

+The stream must include at least one video track and one audio track:

-The stream must include at least one video track and one audio track:

+- Video must be encoded using H.264.

+- Audio must use OPUS codec.

-* Video must be encoded using H.264.

-* Audio must use OPUS codec.

+If you use OBS or your own WHIP library, use the following video encoding parameters:

-If you use OBS or your own WHIP library, use the following video encoding parameters:

+- Codec H.264 with no B-frames and fast encoding:

-* Codec H.264 with no B-frames and fast encoding:

- * **Encoder**: x264, or any of H.264

- * **CPU usage**: very fast

- * **Keyframe interval**: 1 sec

- * **Profile**: baseline

- * **Tune**: zero latency

- * **x264 options**: bframes=0 scenecut=0

+ - **Encoder**: x264, or any other H.264 encoder

+ - **CPU usage**: very fast

+ - **Keyframe interval**: 1 sec

+ - **Profile**: baseline

+ - **Tune**: zero latency

+ - **x264 options**: bframes=0 scenecut=0

-* Bitrate:

- * The lower the bitrate, the faster the data will be transmitted to the server. Choose the optimal one for your video. For example, 1-2 Mbps is usually enough for video broadcasts of online training format or online broadcasts with a presenter.

+- Bitrate:

+ - The lower the bitrate, the faster the data will be transmitted to the server. Choose the optimal one for your video. For example, 1-2 Mbps is usually enough for video broadcasts of online training format or online broadcasts with a presenter.
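
The keyframe interval above is given in seconds, while many encoders expect a GOP length in frames (for example, FFmpeg's `-g` option). A minimal Python sketch of that conversion follows; `gop_size` is a hypothetical helper and the frame rates are example values, not recommendations.

```python
def gop_size(fps: float, keyframe_interval_s: float) -> int:
    """Return the number of frames between keyframes for a given frame rate."""
    if fps <= 0 or keyframe_interval_s <= 0:
        raise ValueError("fps and keyframe interval must be positive")
    return round(fps * keyframe_interval_s)


# A 1-second keyframe interval, as listed above, at two example frame rates.
frames_at_30fps = gop_size(30, 1)
frames_at_60fps = gop_size(60, 1)
```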

For example, you might have the following settings in OBS:

@@ -83,27 +85,27 @@ For example, you might have the following settings in OBS:

### Supported WHIP clients

-You can use any libraries to send data via the WebRTC WHIP protocol.

+You can use any libraries to send data via the WebRTC WHIP protocol.

-* Gcore WebRTC WHIP client

-* OBS (Open Broadcaster Software)

-* @eyevinn/whip-web-client

-* whip-go

-* Larix Broadcaster (free apps for iOS and Android with WebRTC based on Pion; SDK is available)

+- Gcore WebRTC WHIP client

+- OBS (Open Broadcaster Software)

+- @eyevinn/whip-web-client

+- whip-go

+- Larix Broadcaster (free apps for iOS and Android with WebRTC based on Pion; SDK is available)

### LL-HLS and LL-DASH outputs

-Streams sent via WebRTC are transcoded in the same way as other streams received via RTMP and SRT.

+Streams sent via WebRTC are transcoded in the same way as other streams received via RTMP and SRT.

-At the output, you can view the streams using any available protocols:

+At the output, you can view the streams using any available protocols:

-* **MPEG-DASH**: ±2-4 seconds latency to a viewer with ABR.

-* **LL-HLS**: ±3-4 seconds latency to a viewer with ABR.

-* **HLS MPEG-TS**: legacy with non-low-latency (±10 seconds latency) with ABR.

+- **MPEG-DASH**: approximately 2-4 seconds of latency to a viewer, with ABR.

+- **LL-HLS**: approximately 3-4 seconds of latency to a viewer, with ABR.

+- **HLS MPEG-TS**: legacy, non-low-latency mode (approximately 10 seconds of latency), with ABR.

For WebRTC mode, we use constant transcoding at the initially given resolution. This means that if WebRTC in a viewer’s browser reduces the quality or resolution of the master stream (for example, to 360p) due to restrictions on the viewer’s device (such as network conditions or CPU consumption), the transcoder will continue to transcode the reduced stream to the initial resolution (for example, 1080p ABR).

-When the restrictions on the viewer's device are removed, quality will improve again.

+When the restrictions on the viewer's device are removed, quality will improve again.

-5\. Allow Gcore to access your camera and microphone. In several seconds the HLS/DASH stream will appear in an HTML video player.

+5\. Allow Gcore to access your camera and microphone. Within a few seconds, the HLS/DASH stream will appear in an HTML video player.

+You’ll see the result under **Video preview** instead of a black area with the “No active streams found” message. The large HTML video player window shows the transcoded version of the stream, delivered via HLS/DASH with adaptive bitrate.

-A small window in the top-right corner is from your camera. It shows the stream taken from the webcam.

+The small window in the top-right corner shows the raw stream from your webcam.

-There are also settings for selecting a camera and microphone if you have more than one option on your device.

+There are also settings for selecting a camera and microphone if you have more than one option on your device.

## Convert WebRTC to HLS in your environment

-We provide a WebRTC WHIP library for working in browsers. It implements the basic system calls and simplifies working with WebRTC:

+We provide a WebRTC WHIP library for working in browsers. It implements the basic system calls and simplifies working with WebRTC:

-* Wrapper for initializing WebRTC stream and connecting to the server.

-* Camera and mic wrapper.

-* Monitoring WebRTC events and calling appropriate handlers in your code.

+- Wrapper for initializing WebRTC stream and connecting to the server.

+- Camera and mic wrapper.

+- Monitoring WebRTC events and calling appropriate handlers in your code.

The latest library version, 0.72.0, is available at https://rtckit.gvideo.io/0.72.0/index.esm.js.

### Start a stream with the Gcore WebRTC WHIP library

-Since WHIP is an open standard, many libraries have been released for it in different languages. You can use our WebRTC WHIP or any other library specified in the WHIP clients section.

+Since WHIP is an open standard, many libraries have been released for it in different languages. You can use our WebRTC WHIP library or any other library listed in the Supported WHIP clients section.